Braintrust and Adaline both support LLM evaluation and observability, but they differ in how they cover the broader AI development lifecycle and enable cross-team collaboration.

Braintrust is evaluation-first, optimized for systematic testing and CI/CD integration. It’s strong at running eval suites, turning production traces into test datasets, and using GitHub Actions to automate checks. The workflow is largely engineer-led, with code-first evaluation pipelines driving iteration.

Adaline is a collaborative platform that covers the full prompt lifecycle: iteration, evaluation, deployment, and monitoring. Product managers can iterate on prompts without depending on engineering teams. Prompts ship like code, with version control, staging environments, and instant rollback. Teams collaborate in a single workspace rather than passing work back and forth across multiple tools. Evaluations run continuously, but they’re part of a broader workflow that treats prompts as deployable artifacts.

The core difference: Braintrust tells you whether prompts pass tests. Adaline tells you whether prompts pass tests, enables non-engineers to iterate on improvements, and deploys those improvements with production-grade safeguards—all in one collaborative platform.

Braintrust

Braintrust is an AI evaluation and observability platform centered on systematic testing. It offers robust evaluation frameworks with built-in CI/CD support, helping teams catch regressions before they hit production by running automated checks on every pull request.

Application

- Evaluation-first approach: Structured testing using datasets, tasks, and scorer frameworks.

- Built-in CI/CD workflows: GitHub Actions that execute evals and report results on pull requests.

- Brainstore observability: Production logging tailored for AI traces with fast query performance.

- Code-first operation: Engineers build evaluation pipelines via the SDK.

- Loop AI assistant: Tools for prompt optimization and dataset generation.

- Best for: Engineering-led teams that prioritize evaluation rigor and CI/CD integration.

Important Note

Braintrust is strong on evaluation and CI/CD, but most workflows still require engineering involvement. Product managers may prototype in a basic playground, then engineers implement the evaluation pipelines and connect them to deployment. Prompt deployment, version control, and staging workflows are typically handled outside the platform and require custom engineering.

Adaline

Screenshot of observability results in the Adaline dashboard.

Adaline is the collaborative AI development platform built around the complete prompt lifecycle. It integrates iteration, evaluation, deployment, and monitoring so cross-functional teams work together without tool-switching or engineering bottlenecks.

The Adaline Editor and Playground, where users design and test prompts with different LLMs, including with tool calls and MCP.

Application

- Complete prompt lifecycle: Iterate, evaluate, deploy, and monitor in one platform.

- Independent PM iteration: Product managers test prompts with full evaluation access—no engineering required.

- Production-grade deployment: Version control, dev/staging/prod environments, instant rollback, feature flags.

- Collaborative workspace: PMs validate improvements; engineers pull production-ready code.

- Continuous evaluation: Automated testing on live traffic with quality gates.

- Dataset-driven testing: CSV/JSON upload plus AI-assisted test generation.

- Multi-provider flexibility: Test OpenAI, Anthropic, and Gemini side-by-side.

- Proven results: Reforge reduced deployment time from 1 month to 1 week.

- Best for: Product and engineering teams that need the entire prompt lifecycle managed collaboratively.

Important Note

Adaline fits companies that treat prompts like deployable code and want non-engineers to contribute actively without creating engineering bottlenecks, as well as organizations where iteration speed matters as much as evaluation rigor.

Core Feature Comparison

Workflow comparison

Braintrust workflow for improving a prompt:

- An engineer writes evaluation code defining the dataset, task, and scorers.

- Engineer runs evals programmatically using the SDK or triggers a GitHub Action.

- Review results with the PM and provide feedback to engineering.

- Engineer updates prompt in codebase based on feedback.

- Engineer reruns evals to verify improvement metrics.

- Engineer deploys through the standard CI/CD pipeline outside the platform.

- Monitor with Brainstore, and manually create new test cases from production failures.
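The dataset, task, and scorer structure in the first step can be sketched in plain Python. This is a minimal illustration of the pattern only, not the Braintrust SDK: `Case`, `run_eval`, `exact_match`, and `capital_task` are hypothetical names, and the task is a lookup table standing in for a real model call.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Case:
    """One dataset row: an input and the answer we expect."""
    input: str
    expected: str


def exact_match(output: str, expected: str) -> float:
    """Scorer: 1.0 when the normalized output equals the expected answer."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def run_eval(
    dataset: List[Case],
    task: Callable[[str], str],
    scorers: Dict[str, Callable[[str, str], float]],
) -> Dict[str, float]:
    """Run the task on every case and return each scorer's mean score."""
    if not dataset:
        raise ValueError("dataset must contain at least one case")
    totals = {name: 0.0 for name in scorers}
    for case in dataset:
        output = task(case.input)
        for name, scorer in scorers.items():
            totals[name] += scorer(output, case.expected)
    return {name: total / len(dataset) for name, total in totals.items()}


def capital_task(question: str) -> str:
    """Hypothetical task: a stand-in for calling the model with the prompt."""
    answers = {"Capital of France?": "Paris", "Capital of Japan?": "Kyoto"}
    return answers.get(question, "")


dataset = [
    Case("Capital of France?", "paris"),
    Case("Capital of Japan?", "Tokyo"),
]
results = run_eval(dataset, capital_task, {"exact_match": exact_match})
print(results)  # one correct answer out of two
```

Rerunning the same dataset and scorers after a prompt change gives a before-and-after comparison, which is what the "rerun evals to verify improvement" step relies on.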

Adaline workflow for improving a prompt:

- PM identifies the issue in the production traces dashboard.

- PM adds problematic traces to the evaluation dataset with a single click.

- PM iterates on prompt fix in Playground, seeing live eval results.

- PM validates improvement across all test cases immediately.

- Engineer reviews changes and promotes to the Staging environment.

- Automated evaluations run on staging with pass/fail gates.

- Promote to Production with an instant rollback option and continuous monitoring.
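A pass/fail gate of the kind described in the staging step can be sketched as a threshold check over evaluation scores. This is an illustrative sketch, not Adaline's implementation: the metric names, thresholds, and the `check_gate` helper are all assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class GateResult:
    passed: bool
    failures: List[str]


def check_gate(scores: Dict[str, float], thresholds: Dict[str, float]) -> GateResult:
    """Fail the gate if any metric is missing or below its minimum."""
    failures = [
        f"{metric}: {scores.get(metric, 0.0):.2f} < {minimum:.2f}"
        for metric, minimum in thresholds.items()
        if scores.get(metric, 0.0) < minimum
    ]
    return GateResult(passed=not failures, failures=failures)


# Hypothetical thresholds and staging scores for illustration.
thresholds = {"accuracy": 0.90, "format_valid": 0.99}
staging_scores = {"accuracy": 0.93, "format_valid": 0.97}

result = check_gate(staging_scores, thresholds)
if result.passed:
    print("Gate passed: safe to promote")
else:
    print("Gate failed:", "; ".join(result.failures))
```

Treating a missing metric as a score of 0.0 makes the gate fail closed, so a prompt cannot be promoted just because an evaluator did not run.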

The practical difference: Adaline eliminates engineering dependencies for prompt iteration. What requires engineering cycles for every prompt change in Braintrust happens independently in Adaline, with engineers maintaining deployment control but not blocking iteration velocity.

Collaboration and Iteration Speed

How Braintrust handles product-engineering collaboration:

Engineers own evaluation pipeline creation. Product managers prototype in the playground but cannot independently run comprehensive evaluations. Each iteration requires engineering to build evaluation code, run tests, and interpret results. Deployment is handled by external CI/CD. PM velocity is gated by engineering availability.

How Adaline handles product-engineering collaboration:

Product managers independently iterate with full evaluation access. They upload datasets, run evaluations, and see quality metrics without engineering. Engineers pull production-ready code from PM experiments, maintaining deployment control. Both roles work in one workspace with shared visibility. PMs validate improvements autonomously, while engineers govern releases.

Deployment and Production Safety

Braintrust approach:

Evaluation results inform deployment decisions, but actual deployment happens through external CI/CD. Teams build custom prompt version control, staging environments, and rollback mechanisms. Feature flags require separate tooling. Rolling back requires reverting code and redeploying. The platform shows whether changes pass quality gates, but deployment infrastructure is external.

Adaline approach:

Prompts are treated as deployable artifacts with full lifecycle management. Version control includes commit messages and diffs. Dedicated dev, staging, and production environments come with promotion workflows. Rollback is instant and takes one click. Built-in feature flags support controlled rollouts. Evaluation gates integrate with deployment to automatically block prompts that fail evaluation. Complete audit trails are built in.
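The ideas in this comparison (versioned prompts, per-environment promotion, rollback, and percentage-based feature flags) can be sketched generically. The code below is a hypothetical in-memory model for illustration only; `PromptRegistry`, `in_rollout`, and their methods are invented names and do not reflect either platform's API.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PromptVersion:
    text: str
    message: str  # commit message describing the change


@dataclass
class PromptRegistry:
    versions: List[PromptVersion] = field(default_factory=list)
    # Per-environment promotion history; the last entry is the active version.
    history: Dict[str, List[int]] = field(
        default_factory=lambda: {"dev": [], "staging": [], "production": []}
    )

    def commit(self, text: str, message: str) -> int:
        """Store a new prompt version and return its id."""
        self.versions.append(PromptVersion(text, message))
        return len(self.versions) - 1

    def promote(self, version_id: int, env: str) -> None:
        """Make an existing version the active one in an environment."""
        if not 0 <= version_id < len(self.versions):
            raise IndexError(f"unknown version {version_id}")
        self.history[env].append(version_id)

    def rollback(self, env: str) -> int:
        """Drop the active version and reactivate the previous one."""
        if len(self.history[env]) < 2:
            raise ValueError(f"nothing to roll back to in {env}")
        self.history[env].pop()
        return self.history[env][-1]

    def active(self, env: str) -> PromptVersion:
        return self.versions[self.history[env][-1]]


def in_rollout(user_id: str, percent: int) -> bool:
    """Feature flag: deterministically place a user in a bucket from 0 to 99."""
    bucket = int(hashlib.sha256(user_id.encode()).hexdigest(), 16) % 100
    return bucket < percent


registry = PromptRegistry()
v0 = registry.commit("You are a helpful assistant.", "initial prompt")
v1 = registry.commit("You are a concise, helpful assistant.", "tighten tone")
registry.promote(v0, "production")
registry.promote(v1, "production")
registry.rollback("production")  # v0 is active again
```

Hashing the user id keeps each user in the same bucket across requests, so a 10% rollout exposes a stable 10% of users rather than a random 10% of calls.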

Evaluation Capabilities

Braintrust evaluation strengths:

The datasets, tasks, and scorers framework is well-architected. The AutoEvals library provides comprehensive evaluators. GitHub Actions integration is best-in-class. Brainstore is optimized for querying millions of traces. Loop automatically generates datasets, optimizes prompts, and creates scorers. Engineering teams get powerful evaluation pipeline tools.

Adaline evaluation strengths:

Built-in evaluators include LLM-judge, text similarity, regex, and custom JavaScript/Python. Dataset upload accepts CSV/JSON, plus AI-assisted generation. Continuous evaluation runs on live traffic with drift detection. Pass/fail gates integrate with deployment. Eval cases can be created from production failures in one click. Analytics show quality trends over time. Product managers get the same capabilities as engineers.

Evaluation results from testing 40 user queries on a custom LLM-as-Judge rubric.
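Two of the evaluator types named above, regex and text similarity, are simple enough to sketch with the Python standard library. This is an illustration of the general idea, not either platform's built-in evaluator: the function names are hypothetical, and `difflib.SequenceMatcher` is one of several reasonable similarity measures.

```python
import re
from difflib import SequenceMatcher


def regex_score(output: str, pattern: str) -> float:
    """Return 1.0 if the pattern appears anywhere in the output, else 0.0."""
    return 1.0 if re.search(pattern, output) else 0.0


def similarity_score(output: str, reference: str) -> float:
    """Return a case-insensitive similarity ratio between 0.0 and 1.0."""
    return SequenceMatcher(None, output.lower(), reference.lower()).ratio()


# Check that a reply contains a five-digit order number.
has_order_id = regex_score("Order #12345 has shipped.", r"#\d{5}\b")

# Compare a model reply against a reference answer.
closeness = similarity_score(
    "Your order has shipped and arrives Friday.",
    "Your order shipped and will arrive on Friday.",
)
print(has_order_id, round(closeness, 2))
```

Rule-based scorers like these are cheap and deterministic, which makes them a useful complement to an LLM-judge rubric for checks such as format or required fields.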

Both platforms offer strong evaluation. The difference is integration: Braintrust evaluation feeds external deployment. Adaline evaluation controls integrated deployment with automated gates.

When to choose each platform

Choose Adaline when:

- Product managers should iterate without engineering bottlenecks.

- You want prompts versioned, deployed, and rolled back like production code.

- Cross-functional collaboration matters—engineering dependencies slow velocity.

- You need the entire lifecycle (iterate, evaluate, deploy, monitor) integrated.

- Non-engineers actively contribute to AI development.

- Time-to-market is critical—ship in hours, not days.

- You want staging environments for safe testing.

- Integrated workflows are preferred over multiple tools.

Choose Braintrust when:

- Evaluation rigor and CI/CD integration are top priorities.

- Engineering owns the AI workflow with minimal PM involvement.

- You want best-in-class GitHub Actions with eval-on-PR.

- Code-first pipelines are preferred over no-code workflows.

- You have ML platform teams to build deployment infrastructure.

- Free self-hosting with unlimited features is required.

- Loop AI for automated optimization is valuable.

- Your workflow handles deployment through external tools.

- You're building version control and deployment separately.

Conclusion

For teams building production AI, the choice between Braintrust and Adaline depends on your team structure, workflow priorities, and where bottlenecks exist today.

Braintrust excels as an evaluation and CI/CD platform for engineering-led teams who want rigorous testing integrated into pull requests. If evaluation depth and GitHub integration matter most, and you'll build deployment workflows separately, Braintrust is purpose-built for that use case.

Adaline delivers the complete prompt lifecycle for cross-functional teams where iteration speed and collaboration matter as much as evaluation rigor. Evaluation capabilities are strong but integrated into a broader workflow where PMs iterate independently, prompts deploy with version control, and the entire team collaborates in one workspace.

Choose based on whether you want best-in-class evaluation with CI/CD integration (Braintrust) or best-in-class collaboration with integrated deployment management (Adaline).

Frequently Asked Questions (FAQs)

Which platform is better for cross-functional teams?

Adaline. Product managers iterate independently in the Playground with full evaluation results, comparing prompt variations across models and testing against complete datasets—without engineering dependencies for each change. Engineers pull winning prompts directly into production with version control and deployment controls. Both roles work in one workspace with shared visibility into performance and quality.

Braintrust requires engineering involvement for comprehensive evaluation workflows. Product managers prototype in a basic playground, then engineering builds evaluation pipelines, writes test code, and runs comprehensive tests. Iteration velocity depends on engineering availability, creating bottlenecks when engineers are focused on other priorities.

Can non-engineers meaningfully contribute to AI development?

Yes, with Adaline. PMs, domain experts, and designers create prompts, upload test datasets, run evaluations, and review results without writing code. Engineers maintain deployment control but aren't bottlenecks.

Braintrust is built for engineers. Non-technical users provide feedback but cannot independently run comprehensive evaluations unless engineering builds the infrastructure first.

Which platform offers better prompt deployment capabilities?

Adaline. Prompts are treated like production code with version control, dev/staging/production environments, instant rollback, and feature flags. Quality gates prevent bad deployments. The full deployment workflow is integrated.

Braintrust focuses on evaluation. Teams deploy through external CI/CD and build custom version control, staging, and rollback, which typically requires significant engineering effort.

How do evaluation capabilities compare?

Both offer strong evaluation. Braintrust provides best-in-class CI/CD with native GitHub Actions, AutoEvals library, and Loop optimization. Evaluation depth is excellent.

Adaline provides comparable depth with continuous evaluation on live traffic, one-click eval creation from failures, and quality gates tied to deployment. Together these create a closed improvement loop.

The difference: Braintrust evaluation informs external deployment. Adaline evaluation controls integrated deployment with automated gates.

Which tool helps ship AI features faster?

Adaline, for cross-functional teams where engineering dependencies create bottlenecks. By removing engineering requirements from prompt iteration while maintaining deployment governance, teams move faster. Reforge reduced deployment cycles from 1 month to 1 week using Adaline's integrated workflow. Product managers validate improvements independently, and engineers deploy with confidence through staging and quality gates.

Braintrust accelerates evaluation velocity for engineering teams who build rigorous testing into their workflow. Notion achieved 10x productivity improvements with systematic evaluation replacing manual review. Speed comes from evaluation confidence reducing manual QA cycles, not from removing engineering dependencies.

Choose based on your bottleneck: If evaluation rigor slows you down, Braintrust accelerates that. If engineering dependencies for iteration create delays, Adaline eliminates those bottlenecks.

Can I self-host each platform?

Both support self-hosting with different approaches. Braintrust offers free self-hosting with no feature limits or restrictions, making it accessible to teams of all sizes including open-source projects and cost-conscious organizations.

Adaline provides self-hosting for enterprise customers with on-premise deployment or virtual private cloud options. Solutions support compliance requirements like SOC 2 and HIPAA with complete data residency control. All data stays in your controlled environment.

If self-hosting without enterprise contracts is required, Braintrust provides more accessible options for smaller teams.

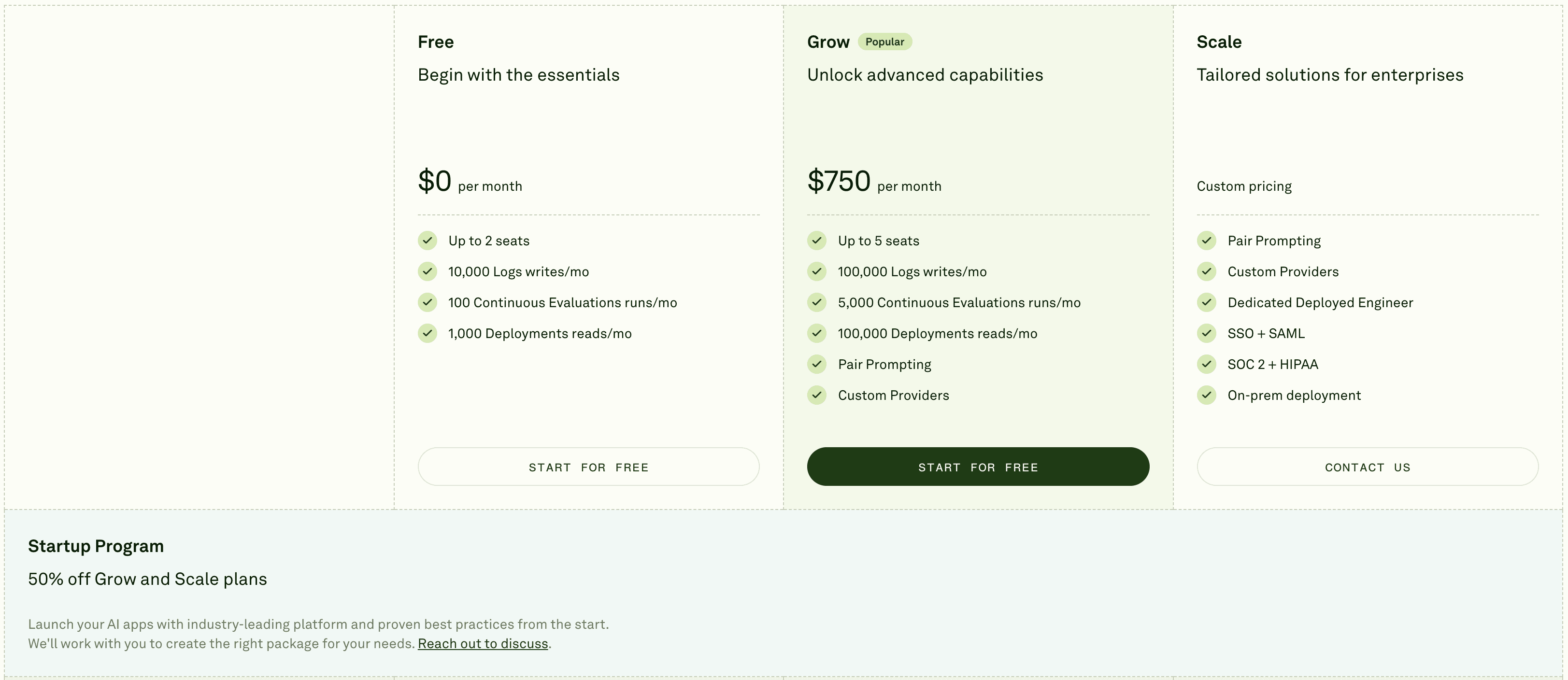

How does pricing compare?

Braintrust offers generous free tiers and free unlimited self-hosting, making it accessible for smaller teams, open-source projects, and organizations building custom infrastructure anyway.

Adaline pricing starts at $750/month (Grow tier with 5 seats). However, factor in engineering time saved: building prompt deployment pipelines, version control systems, staging environments, rollback mechanisms, and PM collaboration tools typically requires 4-8 weeks of senior engineering time—$50K-100K in labor costs—often exceeding Adaline's subscription cost.

Adaline's price offering.

For small teams or those building custom infrastructure regardless, Braintrust’s pricing is more accessible. For teams wanting integrated workflows without infrastructure development overhead, Adaline's ROI becomes clear when engineering time is considered.
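The comparison above can be made concrete with a rough break-even calculation using only the figures quoted in this answer: a $750/month subscription against a one-time $50K-100K build. This is a simplified sketch that assumes the subscription stays at the Grow tier price and ignores ongoing maintenance of a custom build, extra seats, and usage-based charges.

```python
def breakeven_months(build_cost: float, monthly_fee: float) -> float:
    """Months of subscription fees that add up to a one-time build cost."""
    return build_cost / monthly_fee


MONTHLY_FEE = 750  # Grow tier price quoted above

low = breakeven_months(50_000, MONTHLY_FEE)    # about 66.7 months
high = breakeven_months(100_000, MONTHLY_FEE)  # about 133.3 months

print(f"Annual subscription cost: ${MONTHLY_FEE * 12:,}")  # $9,000
print(f"Break-even range: {low:.1f} to {high:.1f} months")
```

Under these assumptions, the subscription takes roughly five and a half to eleven years to equal the estimated build cost, before counting the cost of maintaining custom infrastructure.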

Which platform is better for AI agents versus single prompts?

Adaline and Braintrust both excel at evaluating multi-step agent workflows with comprehensive tracing through complex chains.

Adaline handles both single prompts and multi-turn conversations with trace support for agent workflows. The deployment and collaboration advantages—version control, staging environments, cross-functional iteration—apply equally to agents and prompts. Choose based on whether agent-specific evaluation depth or integrated lifecycle management with deployment workflows matters more for your use case.