Langfuse and Adaline both support LLM observability and prompt management, but they diverge in scope and in how they structure the AI development lifecycle.

Langfuse is an open-source observability platform centered on tracing, monitoring, and analytics. It offers core primitives for tracking LLM behavior, which engineering teams typically compose into their own operational workflows.

Adaline, on the other hand, is a complete collaborative platform for the entire prompt lifecycle. It covers iteration, evaluation, deployment, and monitoring. Production traces inform systematic improvements. Product managers and engineers work together in one unified workspace. Prompt changes deploy like code with version control, staging environments, and instant rollback. Evaluation runs continuously on live traffic to catch regressions before users notice.

The core difference: Langfuse shows you what happened in production. Adaline shows you what happened, helps you fix it systematically, lets non-engineers collaborate on improvements, and deploys changes with production-grade safety—all in one platform.

Langfuse

Langfuse is an open-source LLM observability platform that offers end-to-end tracing and monitoring for LLM applications. It helps teams understand how their AI behaves in production by surfacing detailed traces and analytics dashboards.

Langfuse Application

- Observability and tracing: granular visibility into LLM calls and application behavior in production.

- Analytics dashboards: track usage patterns and monitor costs across your LLM stack.

- Open source flexibility: MIT-licensed, with strong self-hosting guidance and infrastructure control.

- Building blocks approach: provides foundational observability primitives that teams can tailor and extend.

- Best for teams with DevOps capacity that want to build their own evaluation and deployment workflows on top of observability.

Summary

Langfuse is strong on observability, but it largely ends at visibility. Turning production findings into repeatable improvements typically requires additional engineering work. This includes:

- Converting traces into evaluation datasets.

- Implementing evaluators and wiring results into deployment pipelines.

- Setting up collaboration processes between product and engineering teams.
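To make the first of these tasks concrete, here is a minimal sketch of turning exported traces into an evaluation dataset. The trace fields (`id`, `input`, `output`, `score`) and the helper names are assumptions made for illustration. They are not the export schema of Langfuse or any other tool, so a real export would need its own field mapping.

```python
import json
from typing import Any

# Hypothetical exported traces; real exports will have a different shape.
SAMPLE_TRACES = [
    {"id": "t1", "input": "Reset my password", "output": "Please contact support.", "score": 0.2},
    {"id": "t2", "input": "Cancel my plan", "output": "Your plan is cancelled.", "score": 0.9},
    {"id": "t3", "input": "", "output": "n/a", "score": 0.1},
]


def traces_to_dataset(traces: list[dict[str, Any]], max_score: float = 0.5) -> list[dict[str, str]]:
    """Keep low-scoring traces that have a usable input as evaluation cases."""
    rows = []
    for trace in traces:
        if not trace.get("input"):
            continue  # a trace without input cannot be replayed
        if trace.get("score", 0.0) > max_score:
            continue  # only failures become regression cases
        rows.append(
            {
                "trace_id": trace["id"],
                "input": trace["input"],
                "bad_output": trace["output"],
            }
        )
    return rows


def to_jsonl(rows: list[dict[str, str]]) -> str:
    """Serialize rows as JSON Lines, one test case per line."""
    return "\n".join(json.dumps(row) for row in rows)


dataset = traces_to_dataset(SAMPLE_TRACES)
print(to_jsonl(dataset))
```

Even in this toy form, the team has to own the filtering threshold, the field mapping, and the output format, which is the kind of glue code the list above refers to.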

Adaline

The Adaline Editor and Playground let users design and test prompts with different LLMs, including prompts that use tool calls and MCP.

Adaline is a collaborative AI development platform built around the complete prompt lifecycle. It connects observability directly to systematic improvement, enabling teams to iterate faster while maintaining production quality.

Adaline Application

- Complete prompt lifecycle: Iterate, evaluate, deploy, and monitor in one platform that eliminates tool-switching.

- Cross-functional collaboration: PMs, engineers, and domain experts work together without handoffs.

- Production-grade deployments: Version control, dev/staging/prod environments, and instant rollback.

- Continuous evaluation: Automatically test live traffic to detect quality drift, cost spikes, or latency increases.

- Multi-provider playground: Test prompts across OpenAI, Anthropic, Gemini, and custom models side-by-side.

- Dataset-driven testing: Upload CSV/JSON files and run evaluations on hundreds or thousands of test cases.

- Proven results: Reforge reduced deployment time from 1 month to 1 week. Teams report 50% faster iteration cycles.

Summary

Adaline helps users monitor their AI product with traces and spans.

Adaline is best for product and engineering teams shipping AI features to real users who need speed, quality, and collaboration, not just visibility. It suits companies that treat prompts like production code and want to catch issues before customers encounter them, as well as organizations where non-technical stakeholders actively contribute to AI feature development.

Core Feature Comparison

Workflow Comparison

Langfuse workflow when a production issue occurs:

- Spot the failure in the production traces dashboard.

- Manually export the relevant examples to an external eval tool or spreadsheet.

- Create or update evaluation scripts (Python or JavaScript).

- Run evaluations outside the platform using separate infrastructure.

- Apply prompt changes in your codebase based on the results.

- Ship via your existing CI/CD pipeline, which has no prompt-specific safeguards.

- Monitor again, then repeat the cycle.

Adaline workflow when a production issue occurs:

Traces and spans in the Adaline dashboard.

- Identify the failure in the production traces dashboard.

- Click to add a problematic trace to the evaluation dataset instantly.

- Iterate on the prompt fix in the Playground, with real evaluation results showing the improvement.

- Deploy to the Staging environment with one click for safe testing.

- Run automated evaluations on staging with pass/fail gates.

- Promote to Production with an instant rollback option if metrics degrade.

- Continuous evaluation monitors live traffic automatically to confirm the fix holds.

The practical difference: Adaline eliminates weeks of custom engineering. With Langfuse, you build the prompt versioning system, deployment pipeline, evaluation framework, dataset management tools, and collaboration interfaces yourself. In Adaline, they are production-ready from day one.
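The continuous evaluation step in the workflow above can be pictured as a rolling check on a quality metric. The sketch below is an illustration only: `DriftMonitor`, its parameters, and the sample scores are hypothetical and do not describe how Adaline or Langfuse implement monitoring.

```python
from collections import deque
from statistics import mean


class DriftMonitor:
    """Flag drift when the rolling mean of a score drops below baseline - tolerance."""

    def __init__(self, baseline: float, tolerance: float, window: int = 5) -> None:
        self.baseline = baseline
        self.tolerance = tolerance
        self.scores: deque = deque(maxlen=window)  # keeps only the latest `window` scores

    def observe(self, score: float) -> bool:
        """Record one live-traffic score and report whether drift is detected."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough observations to judge yet
        return mean(self.scores) < self.baseline - self.tolerance


# Hypothetical quality scores from live traffic, degrading over time.
monitor = DriftMonitor(baseline=0.90, tolerance=0.05, window=3)
alerts = [monitor.observe(score) for score in [0.92, 0.91, 0.90, 0.80, 0.78, 0.75]]
print(alerts)
```

The same pattern applies to cost or latency by inverting the comparison. A real system would also need alert routing and a way to tie each alert back to a prompt version.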

Collaboration and Team Dynamics

How Langfuse handles cross-functional collaboration:

Langfuse is developer-focused with code-first workflows. PMs prototype ideas in ChatGPT or spreadsheets, then hand requirements to engineering. Engineers translate those experiments into production code. Every change needs an engineer to deploy it. Documentation ends up scattered across tools.

How Adaline handles cross-functional collaboration:

Evaluation results from testing 40 user queries on a custom LLM-as-Judge rubric.

Adaline provides a unified workspace where PMs and engineers iterate together. PMs test prompts in the Playground with real evaluation data and no coding required. Engineers pull production-ready code directly from PM experiments. Prompt changes are tracked like commits with full audit trails. Everyone sees performance, deployment status, and quality metrics in real time.

Deployment and Production Safety

Langfuse approach:

No built-in deployment management. Prompt versioning is basic, so teams build their own environment separation and promotion workflows. There are no staging environments for safe testing. Rollback requires manual code changes. Feature flags require external tools. Production safety relies on general CI/CD, not prompt-specific safeguards.

Adaline approach:

Adaline lets users create their own environments for every stage of development.

Prompts are treated as deployable artifacts with full version control. Dev, staging, production, and custom environments are included. Prompts are promoted only after evaluation gates pass. Instant one-click rollback minimizes downtime. Built-in feature flags enable controlled rollouts. Quality gates prevent bad prompts from reaching production.
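The idea of gated promotion with rollback can be sketched in a few lines. Everything here is hypothetical and chosen for illustration: `PromptRegistry`, the environment names, and the 0.95 pass-rate threshold are assumptions, not Adaline's API or its default settings.

```python
from dataclasses import dataclass, field

ENVIRONMENTS = ["dev", "staging", "production"]


@dataclass
class PromptRegistry:
    """Toy registry: each environment keeps a history of deployed prompt versions."""

    history: dict[str, list[str]] = field(
        default_factory=lambda: {env: [] for env in ENVIRONMENTS}
    )

    def deploy(self, env: str, version: str) -> None:
        self.history[env].append(version)

    def current(self, env: str):
        """Return the live version in `env`, or None if nothing is deployed."""
        return self.history[env][-1] if self.history[env] else None

    def promote(self, source: str, target: str, pass_rate: float, threshold: float = 0.95) -> bool:
        """Copy the source version to the target only if the evaluation gate passes."""
        version = self.current(source)
        if version is None or pass_rate < threshold:
            return False  # gate failed: target environment is left untouched
        self.deploy(target, version)
        return True

    def rollback(self, env: str):
        """Drop the latest version and return the one that is live afterwards."""
        if len(self.history[env]) > 1:
            self.history[env].pop()
        return self.current(env)


registry = PromptRegistry()
registry.deploy("production", "v1")
registry.deploy("staging", "v2")

blocked = registry.promote("staging", "production", pass_rate=0.80)
promoted = registry.promote("staging", "production", pass_rate=0.97)
live = registry.current("production")
restored = registry.rollback("production")
print(blocked, promoted, live, restored)
```

Keeping a per-environment history is what makes rollback a cheap pointer move rather than a code change, which is the contrast this section draws.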

Evaluation Capabilities

Langfuse evaluation:

Requires external frameworks and custom coding. Teams export traces, build evaluation scripts, and manually correlate results to prompts. No built-in evaluators or quality metrics. Creating systematic workflows requires significant infrastructure development that teams maintain alongside features.

Adaline evaluation:

Built-in framework includes LLM-as-judge scoring, text similarity, regex checks, and custom JavaScript or Python logic. Upload datasets with hundreds or thousands of test cases. Run automated evaluations and see pass/fail rates, quality scores, token usage, latency, and cost—all in-platform. Continuous evaluation runs on live traffic automatically. Pass/fail gates integrate with deployments to prevent regressions.
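For readers unfamiliar with these evaluator types, the sketch below shows what a regex check and a text similarity score can look like, combined into a pass rate over a small dataset. It uses only the Python standard library. The function names, the 0.6 similarity threshold, and the test cases are assumptions for illustration and are not taken from either platform. LLM-as-judge scoring is left out because it requires a model call.

```python
import re
from difflib import SequenceMatcher


def regex_check(output: str, pattern: str) -> bool:
    """Pass if the output contains a match for the pattern."""
    return re.search(pattern, output) is not None


def similarity(output: str, reference: str) -> float:
    """Case-insensitive similarity ratio between 0.0 and 1.0."""
    return SequenceMatcher(None, output.lower(), reference.lower()).ratio()


def pass_rate(cases: list[dict[str, str]], pattern: str, min_similarity: float = 0.6) -> float:
    """Fraction of cases that satisfy both the regex and the similarity evaluator."""
    passed = sum(
        1
        for case in cases
        if regex_check(case["output"], pattern)
        and similarity(case["output"], case["reference"]) >= min_similarity
    )
    return passed / len(cases)


# Hypothetical test cases: each pairs a model output with a reference answer.
CASES = [
    {"output": "Your order #1234 has shipped.", "reference": "Your order #1234 has shipped."},
    {"output": "I cannot help with that.", "reference": "Your order #5678 has shipped."},
]

rate = pass_rate(CASES, pattern=r"#\d{4}")
print(f"pass rate: {rate:.0%}")
```

A pass rate like this is the number a deployment gate would compare against its threshold.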

When to Choose Each Platform

Choose Adaline when:

- You ship AI features to real users and need the entire prompt lifecycle managed.

- Product managers should participate in iterations without engineering bottlenecks.

- You want prompts versioned, deployed, and rolled back like production code.

- You need built-in evaluation frameworks instead of weeks of custom engineering.

- Cross-functional collaboration matters—scattered tools slow iteration.

- You're scaling AI and need dataset-driven systematic testing.

- Time to market is critical—ship improvements in hours, not weeks.

- You want continuous monitoring to catch regressions before users notice.

- Cost optimization matters alongside quality.

- You need multi-provider testing without rewriting integrations.

- Non-engineers contribute domain expertise to prompt development.

Choose Langfuse when:

- You primarily need observability without immediate deployment or evaluation needs.

- You have DevOps resources to build versioning, deployment, and evaluation infrastructure.

- Open-source transparency and self-hosting are hard requirements, and you don't have an enterprise contract.

- Engineering owns AI workflow end-to-end with minimal PM collaboration.

- You're comfortable managing ClickHouse, Redis, and S3 infrastructure.

- You're in a research phase without immediate production deployment.

- Your team prefers assembling best-of-breed tools over integrated platforms.

- Budget is constrained, and you can dedicate engineering to building missing capabilities.

Conclusion

For most teams building production AI applications, Adaline provides the complete collaborative platform that Langfuse requires you to build yourself.

Langfuse excels as an observability foundation for teams with strong DevOps who want to construct custom workflows. Adaline delivers an end-to-end workflow in which observability drives improvement, teams collaborate in one workspace, and prompts deploy with production-grade safety.

The choice depends on your team structure, timeline, and whether you want to build infrastructure or ship AI features.

Frequently Asked Questions (FAQs)

Which tool is better for cross-functional teams?

Adaline. Product managers iterate in the Playground with live evaluation results, compare variations across models, and test against real datasets—no coding required. Engineers pull winning prompts directly into production with version control. The entire team sees performance, quality metrics, and deployment status in one workspace.

Langfuse is developer-centric. PMs experiment in external tools like ChatGPT, then engineering rebuilds solutions in code. Collaboration requires more engineering time and creates translation gaps.

Can I deploy prompts safely with version control?

Adaline treats prompts like production code. Every prompt has a complete version history with commit messages, diffs, and metadata. Promote through dev, staging, and production environments after testing. Roll back with one click if issues arise. Feature flags enable controlled rollouts.

Langfuse provides basic versioning but no deployment management. Teams build their own environment separation, promotion workflows, and rollback strategies—typically requiring weeks of engineering.

Which platform is better for systematic evaluation?

Adaline provides built-in evaluators: LLM-as-judge, text similarity, regex checks, and custom JavaScript/Python logic. Upload datasets with hundreds or thousands of test cases. Run automated evaluations showing pass/fail rates, quality scores, token usage, and cost. Continuous evaluation detects drift automatically on live traffic.

Langfuse requires external tools and custom engineering. Teams export traces, build scripts, and manually correlate results to prompts. Converting insights into improvements takes significant development time.

How does pricing compare when you factor in engineering time?

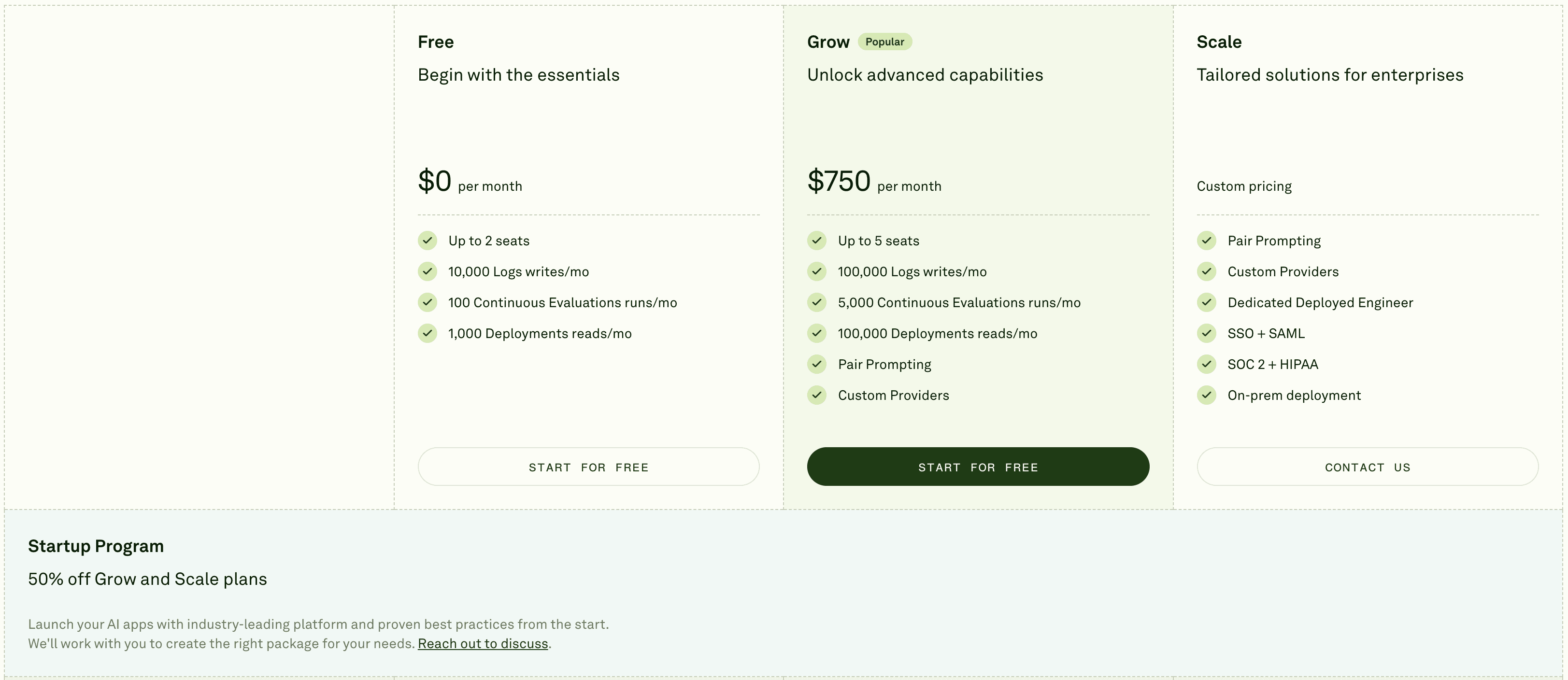

Adaline's pricing includes the complete lifecycle: iteration, evaluation, deployment, monitoring, and collaboration. Typical engagement starts at $750/month (Grow). All capabilities are included immediately.

Adaline's price offering.

Langfuse starts cheaper ($199/month vs. $750/month). However, building prompt deployment pipelines, evaluation frameworks, dataset management, and PM collaboration tools typically requires 4-8 weeks of senior engineering time—$50K-100K in labor. Most teams spend more building what Adaline includes than Adaline's subscription cost.
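The arithmetic behind this comparison can be worked through directly. The sketch below uses only the figures quoted in this answer ($199/month, $750/month, and $50K-100K of build labor). It does not verify those figures, and it ignores ongoing maintenance, usage-based charges, and the fact that teams may not need every capability.

```python
LANGFUSE_MONTHLY = 199      # USD per month, as quoted above
ADALINE_MONTHLY = 750       # USD per month, as quoted above
BUILD_COST_LOW = 50_000     # USD, low end of the quoted labor estimate
BUILD_COST_HIGH = 100_000   # USD, high end of the quoted labor estimate


def total_cost(monthly: float, months: int, upfront: float = 0) -> float:
    """Subscription spend over a period plus any one-time build cost."""
    return upfront + monthly * months


def breakeven_months(build_cost: float, cheaper: float, pricier: float) -> float:
    """Months until the monthly price gap adds up to the one-time build cost."""
    return build_cost / (pricier - cheaper)


first_year_adaline = total_cost(ADALINE_MONTHLY, 12)
first_year_langfuse = total_cost(LANGFUSE_MONTHLY, 12, upfront=BUILD_COST_LOW)
low = breakeven_months(BUILD_COST_LOW, LANGFUSE_MONTHLY, ADALINE_MONTHLY)
high = breakeven_months(BUILD_COST_HIGH, LANGFUSE_MONTHLY, ADALINE_MONTHLY)

print(f"First year, Adaline: ${first_year_adaline:,.0f}")
print(f"First year, Langfuse plus low-end build: ${first_year_langfuse:,.0f}")
print(f"Break-even: {low:.1f} to {high:.1f} months")
```

Under these assumptions the outcome depends almost entirely on the build-cost estimate, so teams should substitute their own labor figures before drawing conclusions.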

Can I test multiple LLM providers easily?

Adaline. The Playground supports OpenAI, Anthropic, Cohere, and custom models through one interface. Switch providers with a dropdown—no code changes. Run identical prompts across providers side-by-side and compare outputs, costs, and latency. One OpenAI-compatible API accesses multiple vendors.

Langfuse focuses on observability across providers but doesn't provide a unified testing interface. Teams integrate each SDK separately and build custom comparison tools.

Does Adaline support self-hosting?

Yes, for enterprise customers. On-premise deployment or VPC options are available for organizations with strict compliance requirements (SOC 2, HIPAA). All data stays in your controlled environment.

Langfuse provides documented self-hosting for all users with control over ClickHouse, Redis, and S3. If self-hosting is required without enterprise contracts, Langfuse offers more accessible options.

Which tool helps me ship AI features faster?

Adaline. Integrated iteration, evaluation, deployment, and monitoring eliminate weeks of engineering work. PMs prototype without bottlenecks. Engineers deploy with confidence. Issues caught in staging never reach production. Reforge reduced deployment cycles from 1 month to 1 week using Adaline.

Can non-technical team members contribute to prompt development?

Yes, with Adaline. Domain experts, PMs, and designers create and test prompts in the Playground, upload datasets, and run evaluations, all without code. Engineers maintain deployment control.

Langfuse is built for developers. Non-technical stakeholders cannot meaningfully contribute without engineering support, creating bottlenecks.

How long until I see value from each platform?

Adaline: Value within the first week. On day one, you can create prompts, upload data, and run evaluations. Within a week, critical prompts are tested against quality baselines. First staging deployments follow in 1-2 weeks.

Langfuse: Immediate observability value—traces on day one. Full value (connecting to evaluation, deployment, improvement) requires building custom infrastructure, typically 4-8+ weeks.

What if I already use LangChain or other frameworks?

Adaline integrates with existing frameworks. You can keep using LangChain or Haystack while benefiting from Adaline's prompt management: route calls through Adaline's API/SDK or import logs afterward. It complements your existing stack rather than forcing a replacement.

Langfuse also integrates well through callbacks with strong LangChain support. Both work alongside existing infrastructure.