Large Language Model APIs represent a transformative opportunity for product development, offering sophisticated AI capabilities without requiring specialized expertise. These powerful interfaces allow teams to integrate advanced language processing directly into their products, dramatically accelerating time-to-market while reducing technical overhead. Understanding how to effectively implement and optimize LLM APIs can significantly enhance your competitive advantage in today's AI-driven landscape.

This guide explores the technical foundations of LLM APIs, from architecture and integration to key parameters like temperature and token limits that directly impact performance. You'll learn about the strategic trade-offs between using APIs versus custom-built models, and how to navigate the technical capabilities and limitations that will impact your implementation decisions.

Leveraging LLM APIs creates immediate business value through faster development cycles, reduced costs, and enhanced product capabilities. The right implementation can transform user experiences through natural language interfaces, automated content generation, and intelligent assistance—all while carefully managing implementation challenges like cost control, prompt engineering, and quality assurance.

This comprehensive resource covers:

1. Technical foundations of LLM API architecture and integration

2. Strategic value assessment for product development

3. Implementation architecture and security considerations

4. Comparative analysis of leading providers

5. Performance measurement and ROI calculation

6. Practical implementation roadmap for product teams

Understanding LLM APIs: Technical Foundations

In this section, we'll explore the fundamental architecture and capabilities that make LLM APIs such powerful tools for product teams.

Architecture and integration with product workflows

LLM APIs provide developers with access to sophisticated language models without requiring specialized AI expertise. These APIs serve as intermediaries between your product and the underlying language model infrastructure, handling complex processes like tokenization, embedding generation, and response creation. Most providers expose standard HTTPS/REST interfaces that exchange JSON, with streamed responses commonly delivered as server-sent events, so integrating an LLM fits into existing product development workflows much like calling any other web service.
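To make the request flow concrete, here is a minimal sketch that builds (but does not send) a request in the style of OpenAI's Chat Completions endpoint, using only the Python standard library. The model name `gpt-4.1-mini`, the `OPENAI_API_KEY` environment variable, and the helper name `build_chat_request` are assumptions for illustration; other providers use different URLs, authentication headers, and body fields.

```python
import json
import os
import urllib.request

# OpenAI-style endpoint; other providers use different URLs and payload shapes
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, model: str = "gpt-4.1-mini",
                       temperature: float = 0.2, max_tokens: int = 256) -> urllib.request.Request:
    """Build an HTTPS POST request with a JSON body; sending it is left to the caller."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumes the API key is stored in an environment variable
            "Authorization": f"Bearer {os.environ.get('OPENAI_API_KEY', '')}",
        },
        method="POST",
    )


req = build_chat_request("Summarize our refund policy in two sentences.")
# Sending it would be: urllib.request.urlopen(req), which returns the JSON response
```

Keeping request construction separate from sending makes it easy to log, test, or route requests through a gateway before they leave your infrastructure.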

LLM APIs typically consist of three components:

1. The request handler

2. The model processor

3. The response formatter

This modular design allows for scalable implementations across diverse use cases from content generation to conversational interfaces.

Core API parameters and their impact

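Understanding key API parameters is essential for optimizing response quality. The most influential are temperature, which controls randomness; top-p, which limits sampling to the most probable tokens; and the maximum output token limit, which caps response length and therefore cost.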

Response quality is significantly influenced by these parameters, with optimal settings varying by use case. For instance, customer support applications might benefit from low temperature settings, while creative writing would leverage higher values.
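The effect of temperature and top-p can be illustrated with a small simulation. This is a toy model of sampling over four made-up logits, not any provider's actual decoder; providers apply these transformations inside the model server, and the function names here are hypothetical.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive (APIs typically treat 0 as near-greedy decoding)")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, p):
    """Keep the smallest set of tokens whose cumulative probability reaches p, then renormalize."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}


logits = [2.0, 1.0, 0.5, -1.0]  # toy scores for four candidate tokens
for t in (0.2, 1.0, 1.5):
    print(t, [round(q, 3) for q in softmax_with_temperature(logits, t)])
print(top_p_filter(softmax_with_temperature(logits, 1.0), 0.9))
```

At low temperature almost all probability mass lands on the top token, which is why support-style use cases favor low values; top-p then trims the long tail of unlikely tokens regardless of temperature.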

API advantages vs custom-built models

LLM APIs offer several distinct advantages over custom-built models:

1. Inference speed: Cloud-based API infrastructure is optimized for fast response times, running specialized hardware and serving software at a scale that typical self-hosted deployments struggle to match.

2. Resource requirements: APIs eliminate the need for specialized GPU hardware and the associated maintenance costs, significantly reducing the technical overhead.

3. Maintenance: API providers handle model updates, security patches, and infrastructure scaling, freeing development teams to focus on product features rather than model maintenance.

This convenience comes with a trade-off in customization flexibility: you are limited to the models, parameters, and fine-tuning options each provider exposes.

Technical capabilities and limitations

Current LLM APIs excel at tasks like:

- Text generation

- Summarization

- Basic conversational interactions

While technological advances have addressed many early limitations, some challenges remain:

1. Context window management: Though modern APIs offer vastly expanded context windows (GPT-4.1 and Gemini 2.5 Pro with 1 million tokens, Sonnet 3.7 with 200,000 tokens), effectively utilizing this capacity requires careful prompt design and token management. Larger contexts also consume more tokens, directly impacting costs.

2. Latency considerations: Response times increase with larger inputs and more complex instructions, creating potential UX challenges for real-time applications. Even with powerful models, there's a direct relationship between context size, reasoning complexity, and response time.

3. Cost scaling: While context windows have grown, the cost per token remains significant for high-volume applications. Organizations must implement optimization strategies to balance capability with economics.

4. Throughput management: Production deployments still face API rate limits and concurrent request constraints that can restrict throughput during high-demand periods, requiring implementation of queuing systems or fallback mechanisms.
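A common way to manage the context window is to keep the system prompt and drop the oldest conversation turns until the request fits a token budget. The sketch below assumes a rough four-characters-per-token heuristic as a stand-in for a real tokenizer (such as a provider's token-counting tool or the `tiktoken` library); `estimate_tokens` and `trim_history` are hypothetical helpers.

```python
def estimate_tokens(text: str) -> int:
    """Rough heuristic (~4 characters per token for English); use a real tokenizer in production."""
    return max(1, len(text) // 4)


def trim_history(system_prompt, turns, budget, reserve_for_output=512):
    """Keep the system prompt plus the most recent turns that fit within the token budget."""
    available = budget - reserve_for_output - estimate_tokens(system_prompt)
    if available <= 0:
        raise ValueError("budget too small for the system prompt and reserved output")
    kept = []
    # Walk backwards so the newest turns are preserved first
    for turn in reversed(turns):
        cost = estimate_tokens(turn["content"])
        if cost > available:
            break  # everything older than this turn is dropped
        kept.append(turn)
        available -= cost
    return [{"role": "system", "content": system_prompt}] + list(reversed(kept))


turns = [
    {"role": "user", "content": "x" * 4000},       # long, old turn
    {"role": "assistant", "content": "y" * 400},
    {"role": "user", "content": "latest question"},
]
messages = trim_history("You are a helpful support agent.", turns, budget=1000)
```

Reserving room for the output matters because most APIs count input and output tokens against the same context window. Summarizing dropped turns instead of discarding them is a common refinement.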

Understanding these technical boundaries is crucial for designing resilient products that gracefully handle API limitations while making the most of the text processing capabilities these models provide.
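Gracefully handling rate limits usually means retrying with exponential backoff and jitter. The sketch below wraps any callable; `RateLimitError` is a hypothetical stand-in for whatever exception your provider's SDK raises on an HTTP 429 response, and the delay values are illustrative assumptions.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical stand-in for a provider SDK's HTTP 429 exception."""


def call_with_backoff(fn, max_retries=5, base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Call fn(), retrying on RateLimitError with exponential backoff and full jitter."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_retries:
                raise  # give up; the caller can queue the request or fall back to another model
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))  # jitter spreads out retries from many clients


attempts = {"n": 0}


def flaky_call():
    """Simulated API call that is rate-limited twice before succeeding."""
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise RateLimitError("429 Too Many Requests")
    return "ok"


result = call_with_backoff(flaky_call, sleep=lambda s: None)  # skip real sleeping in the demo
```

The injectable `sleep` parameter keeps the function testable; randomized delays prevent many clients that hit the limit at once from retrying in lockstep.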

With a solid understanding of the technical foundations, we can now examine how these capabilities translate into strategic value for product development.

Strategic value of LLM APIs in product development

Now that we understand the technical underpinnings, let's explore how LLM APIs deliver concrete business value across various product contexts.

LLM APIs offer product teams powerful capabilities to integrate AI without specialized expertise. These APIs provide strategic advantages that can significantly impact product development outcomes and operational efficiency.

Faster time-to-market with reduced costs

LLM APIs enable teams to rapidly deploy sophisticated language capabilities without building AI infrastructure from scratch. This acceleration can reduce development cycles by weeks or months. Teams can focus on their core product features while leveraging pre-trained models for functions like content generation, summarization, and sentiment analysis.

The cost savings are substantial compared with developing custom solutions. A single product team can now accomplish what previously required:

- Specialized AI researchers

- Data scientists

- Extensive computing resources

Enhanced product capabilities through AI integration

Integrating LLM APIs creates immediate value through advanced features that would otherwise be impractical to develop internally. Product teams can implement natural language interfaces, automated content generation, and intelligent assistance within existing applications.

These capabilities enable more personalized user experiences through context-aware interactions. Products can adapt to individual users based on their history, preferences, and behaviors.

User engagement typically increases when products understand and respond to natural language. This creates more intuitive interfaces that reduce friction and learning curves.

Competitive differentiation and strategic positioning

In competitive markets, LLM integration can provide significant differentiation. Products that incorporate intelligent features often command premium positioning and higher perceived value.

Teams must carefully evaluate implementation options based on their specific requirements. The selection process should consider:

- Provider capabilities

- Pricing models

- Rate limits

- Compliance features

Strategic considerations include aligning LLM implementation with business objectives and user needs. Teams should prioritize use cases that create maximum value while being technically feasible.

Implementation considerations and challenges

Despite their benefits, LLM APIs present implementation challenges that require careful management. Cost control becomes critical as usage scales, requiring monitoring and optimization strategies.

Prompt engineering presents a technical challenge that significantly impacts output quality. Skilled prompt design is essential for achieving consistent, relevant responses from LLMs.

Quality control mechanisms must be established to detect and minimize:

1. Hallucinations

2. Inaccurate outputs

3. Inconsistent responses

This includes developing testing frameworks and monitoring systems.
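One concrete quality-control check is to request structured output and validate it before it reaches users. The sketch below assumes the prompt asked for JSON with `answer` and `sources` fields (a hypothetical schema). It catches malformed or incomplete outputs, but not factual hallucinations, which still need grounding checks or human review.

```python
import json

REQUIRED_FIELDS = {"answer": str, "sources": list}  # hypothetical schema for this example


def validate_response(raw: str):
    """Return (parsed, errors); an empty errors list means the output passed all checks."""
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, [f"invalid JSON: {exc.msg}"]
    if not isinstance(parsed, dict):
        return None, ["top-level value must be an object"]
    errors = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in parsed:
            errors.append(f"missing field: {field}")
        elif not isinstance(parsed[field], expected_type):
            errors.append(f"wrong type for {field}")
    # An answer with no cited sources is a warning sign for ungrounded content
    if isinstance(parsed.get("sources"), list) and not parsed["sources"]:
        errors.append("answer cites no sources")
    return parsed, errors


good, good_errors = validate_response('{"answer": "Refunds take 5 days.", "sources": ["policy.md"]}')
_, bad_errors = validate_response("not json")
```

Responses that fail validation can be retried, routed to a fallback, or flagged for review, and the error counts feed naturally into the monitoring systems mentioned above.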

Risk mitigation through established APIs

Using established LLM APIs provides significant risk advantages compared to custom solutions. Leading providers maintain compliance frameworks addressing privacy, security, and ethical concerns.

Established APIs typically undergo continuous improvement, with providers regularly updating models to address vulnerabilities and enhance capabilities. This reduces the maintenance burden on product teams.

By leveraging APIs from trusted providers, teams can focus on their core expertise while benefiting from specialized AI capabilities developed by industry leaders.

Now that we've examined the strategic benefits of LLM APIs, let's explore the technical architecture needed for successful implementation.

Technical implementation architecture for LLM APIs

With an understanding of the strategic value in place, this section explores the critical technical infrastructure required to successfully deploy LLM APIs in production environments.

Overview of LLM API architecture | Source: Mastering LLM Gateway: A Developer’s Guide to AI Model Interfacing

API gateway patterns for secure integration

Implementing LLM APIs requires a robust architecture that ensures security and performance. A well-designed API gateway serves as the central point for managing access to LLM services, handling authentication, and enforcing rate limits. This gateway should include data masking components to detect and protect personally identifiable information (PII) before it reaches the model. Additionally, comprehensive audit logging must track all inputs, outputs, and errors for compliance and debugging purposes.
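A gateway's data-masking step can be approximated with pattern matching. The sketch below redacts email addresses and US-style phone numbers before a prompt is forwarded, then builds an audit log line. The regular expressions are deliberately simple assumptions, and `mask_pii` and `audit_record` are hypothetical names; production systems typically rely on dedicated PII-detection services with far broader coverage.

```python
import json
import re
import time

# Simplified patterns; real PII detection must also cover names, addresses, account IDs, etc.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b")


def mask_pii(text: str) -> str:
    """Replace detected emails and phone numbers with placeholders before the model sees them."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def audit_record(user_id, masked_prompt, output=None, error=None):
    """Build one JSON audit log line covering the input, output, and error of a single call."""
    return json.dumps({
        "ts": time.time(),
        "user": user_id,
        "input": masked_prompt,  # only the masked prompt is ever logged
        "output": output,
        "error": error,
    })


masked = mask_pii("Contact jane.doe@example.com or 555-123-4567 about order 42.")
log_line = audit_record("user-17", masked)
```

Masking before logging means the audit trail itself never becomes a store of raw personal data.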

For secure implementation, the API gateway should support:

- End-to-end encryption for data in transit

- Token-based authentication with proper access controls

- Alignment with geographic and industry-specific privacy regulations

Performance optimization techniques

Several techniques can significantly improve LLM API performance and reduce costs:

Token efficiency

Optimizing prompts is crucial for reducing token usage. Careful prompt engineering can decrease input size while maintaining context, directly lowering costs since most providers charge per token processed.

Caching mechanisms

Implementing response caching for frequently requested or identical prompts can dramatically reduce API calls. Semantic caching, which stores responses based on meaning rather than exact text matching, provides even greater efficiency gains for similar but non-identical queries.
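An exact-match cache is the simplest version of this idea. The sketch below keys responses on a hash of the normalized prompt plus the model and temperature, since the same prompt under different settings can produce different outputs. `ResponseCache` is a hypothetical class, the lowercase-and-collapse-whitespace normalization is an assumption suited to FAQ-style queries, and `fake_model` stands in for a real API call. A semantic cache would replace the hash lookup with a nearest-neighbor search over prompt embeddings.

```python
import hashlib
import json
import time


class ResponseCache:
    """In-memory exact-match cache with a time-to-live; a shared store like Redis suits production."""

    def __init__(self, ttl_seconds=3600):
        self.ttl = ttl_seconds
        self._store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt, model, temperature):
        normalized = " ".join(prompt.lower().split())  # ignore case and whitespace differences
        raw = json.dumps([normalized, model, temperature])
        return hashlib.sha256(raw.encode("utf-8")).hexdigest()

    def get_or_call(self, prompt, model, temperature, call_model):
        key = self._key(prompt, model, temperature)
        entry = self._store.get(key)
        if entry and time.time() - entry[0] < self.ttl:
            self.hits += 1
            return entry[1]
        self.misses += 1
        response = call_model(prompt, model, temperature)
        self._store[key] = (time.time(), response)
        return response


cache = ResponseCache()
fake_model = lambda p, m, t: f"answer to: {p.strip()}"  # hypothetical stand-in for the API
first = cache.get_or_call("What is your refund policy?", "small-model", 0.0, fake_model)
second = cache.get_or_call("what is  your refund policy?", "small-model", 0.0, fake_model)
```

Caching works best with low-temperature settings, where users expect the same question to get the same answer.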

Parallel processing

For complex workflows involving multiple LLM operations, parallel processing can minimize overall latency. This approach requires careful orchestration to maintain context across concurrent operations while maximizing throughput.
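When a workflow needs several independent LLM calls (for example, summarizing each section of a long document), they can run concurrently. The sketch uses `asyncio.gather` with a semaphore to cap in-flight requests below a rate limit; `fake_llm_call` is a hypothetical coroutine that simulates network latency in place of a real async SDK call.

```python
import asyncio


async def fake_llm_call(text: str) -> str:
    """Hypothetical stand-in for an async API call; sleeps to simulate latency."""
    await asyncio.sleep(0.1)
    return f"summary of {text!r}"


async def summarize_all(chunks, max_concurrency=4):
    semaphore = asyncio.Semaphore(max_concurrency)  # keep in-flight requests under the rate limit

    async def worker(chunk):
        async with semaphore:
            return await fake_llm_call(chunk)

    # gather preserves input order, so results line up with their source chunks
    return await asyncio.gather(*(worker(c) for c in chunks))


results = asyncio.run(summarize_all([f"section {i}" for i in range(8)]))
```

With eight chunks and a concurrency cap of four, the simulated run takes roughly two call durations instead of eight. Because results come back in order, a final call can combine the partial summaries while preserving the document's structure.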

Security protocols for LLM API integration

The security framework for LLM APIs must layer multiple protections, combining the encryption, authentication, PII masking, and audit logging described above.

These security measures must be balanced with performance considerations to maintain acceptable response times.

Cross-functional collaboration requirements

Successful LLM API implementation requires coordination across multiple teams:

1. Data engineers to design efficient data pipelines and preprocessing workflows

2. Security specialists to implement and audit protection measures

3. Cloud engineers to optimize infrastructure and manage scaling

4. Product managers to define use cases and performance metrics

5. Legal and compliance teams to ensure regulatory adherence

Clear documentation and communication channels between these teams are essential, particularly when addressing issues that span multiple domains such as security incidents or performance bottlenecks.

Regular performance testing with diverse input types and lengths is necessary to ensure robust operation under various conditions. Organizations should implement comprehensive monitoring for cost, performance, and audit trails while maintaining this cross-functional collaboration throughout the deployment lifecycle.

With the technical implementation architecture established, it's important to evaluate the different API providers available in the market.

Comparative analysis of leading LLM API providers

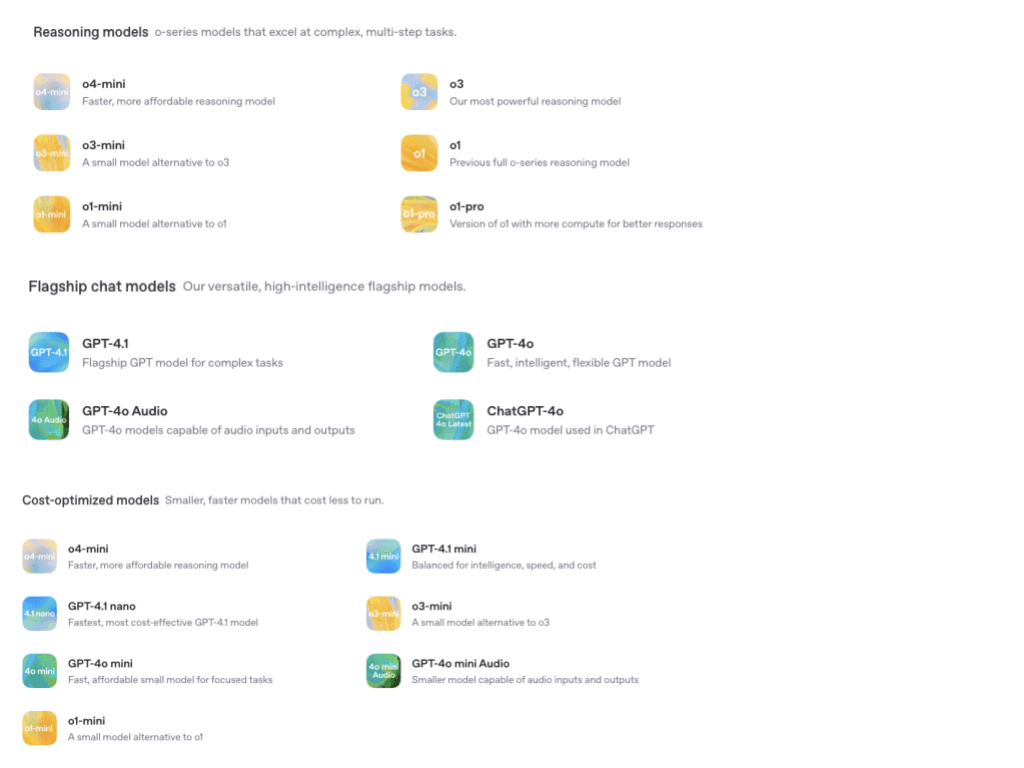

API overview of models provided by OpenAI | Source: OpenAI API

Now that we've covered implementation architecture, let's examine how the major LLM API providers compare across key dimensions to help inform your selection process.

Technical capability comparison

API overview of models provided by Google AI | Source: Gemini models

Different LLM API providers offer varying levels of performance and capabilities, and each has distinct strengths in specific tasks:

- Claude demonstrates exceptional performance in graduate-level reasoning with Extended Thinking Mode

- Gemini 2.5 Pro shows leading capabilities in multimodal tasks and benchmarks

- GPT-4.1 offers strong coding proficiency and reliable format adherence

Pricing structure analysis

API overview of models provided by Anthropic AI | Source: Anthropic AI

The cost of accessing LLMs has decreased significantly over time, and pricing is now typically quoted per million tokens rather than per thousand, with input and output tokens priced separately. Prices vary considerably across models, which allows organizations to balance quality requirements against budget constraints. Budget-friendly options like GPT-4.1 Nano and Gemini 2.0 Flash (each $0.10 per million input tokens and $0.40 per million output tokens) offer cost-effective alternatives for simpler tasks.
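Because input and output tokens are priced separately, a cost estimate is a simple weighted sum. The sketch below uses the GPT-4.1 Nano prices quoted above ($0.10 per million input tokens, $0.40 per million output tokens); the request volume and per-request token counts are hypothetical.

```python
def estimate_cost(input_tokens, output_tokens, input_price_per_m, output_price_per_m):
    """Dollar cost given token counts and prices quoted per million tokens."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000


# Hypothetical workload: 10,000 requests, each ~1,500 input and ~300 output tokens
requests = 10_000
total = estimate_cost(requests * 1_500, requests * 300, 0.10, 0.40)
print(f"${total:.2f}")
```

Here 15 million input tokens cost $1.50 and 3 million output tokens cost $1.20, about $2.70 in total. Output tokens cost four times as much per token, so capping response length is often as effective as trimming prompts.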

Implementation complexity assessment

Implementation complexity varies across providers. Key considerations include:

- SDK availability in major programming languages

- Model parameters for deterministic responses

- Fine-tuning capabilities

Most major providers offer enterprise plans with:

- Additional features

- Higher usage limits

- Dedicated support

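API providers typically impose rate limits (for example, requests and tokens per minute) organized into usage tiers that are raised as an account builds usage and payment history. Each provider's API also allows adjustment of sampling parameters like temperature (controlling randomness) and top-p (restricting sampling to the smallest set of tokens whose cumulative probability reaches p), which must be tuned for specific tasks.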

Performance benchmarks

Performance metrics are critical when comparing LLM APIs:

Reasoning capabilities:

1. Gemini 2.5 Pro leads on several benchmarks including GPQA Diamond (84.0%)

2. Sonnet 3.7 with Extended Thinking achieves similar results (84.8% on GPQA)

3. GPT-4.1 scores strongly on MMLU (90.2%) but lower on specialized reasoning tests

Latency measurements:

1. Specialized models like GPT-4.1 Nano and Gemini Flash variants offer low latency

2. Claude's Extended Thinking mode increases latency significantly (8 seconds to 70 seconds)

3. Google's Live API enables low-latency streaming interactions

The cost-to-performance ratio is another essential metric, revealing how efficiently each model delivers results relative to its price. For enterprises with specific requirements, custom benchmarks can better assess performance in domain-specific tasks, providing more relevant comparisons than general leaderboards.
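A custom benchmark can start as a loop that runs each candidate over labeled domain examples and records accuracy, latency, and cost per correct answer. In the sketch below, the candidate function, test cases, and per-call cost are hypothetical placeholders; in practice each candidate would wrap a real provider call, and the containment check would be replaced by task-specific grading.

```python
import time
from statistics import mean


def run_benchmark(name, model_fn, cases, cost_per_call):
    """Score one candidate on labeled cases: accuracy, mean latency, and cost per correct answer."""
    correct, latencies = 0, []
    for prompt, expected in cases:
        start = time.perf_counter()
        output = model_fn(prompt)
        latencies.append(time.perf_counter() - start)
        if expected.lower() in output.lower():  # naive grading; use task-specific checks in practice
            correct += 1
    total_cost = cost_per_call * len(cases)
    return {
        "model": name,
        "accuracy": correct / len(cases),
        "mean_latency_s": mean(latencies),
        "cost_per_correct": total_cost / correct if correct else float("inf"),
    }


cases = [
    ("Classify sentiment: 'Great service!'", "positive"),
    ("Classify sentiment: 'Never again.'", "negative"),
]
always_positive = lambda prompt: "positive"  # hypothetical weak baseline
report = run_benchmark("baseline", always_positive, cases, cost_per_call=0.001)
```

Cost per correct answer folds quality and price into one number, which makes a cheaper but less accurate model directly comparable with a pricier one.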

When selecting an LLM API, organizations must balance these technical capabilities, pricing structures, implementation requirements, and performance benchmarks against their specific use cases and budget constraints.

Understanding provider differences is crucial, but equally important is establishing how to measure the success of your LLM API implementation.

Measuring performance and ROI of LLM API integration

Having compared the major providers, we now turn to the critical task of measuring performance and calculating the return on investment for your LLM API implementation.

Framework for calculating ROI

Implementing LLM APIs requires careful measurement of both technical performance and business impact. A robust ROI framework should evaluate token consumption, latency metrics, and business outcomes against implementation costs. For optimal results, track both pre-implementation baselines and post-implementation improvements to quantify the actual value delivered.
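At its simplest, the ROI comparison divides net benefit by total cost over the same period. The sketch below assumes benefits have already been converted to dollars (for example, hours saved times a loaded hourly rate); the function name and all figures are hypothetical.

```python
def llm_roi(monthly_benefit, api_cost, engineering_cost, other_costs=0.0):
    """ROI as a fraction: (benefit - total cost) / total cost for the same period."""
    total_cost = api_cost + engineering_cost + other_costs
    if total_cost <= 0:
        raise ValueError("total cost must be positive")
    return (monthly_benefit - total_cost) / total_cost


# Hypothetical month: 400 support hours saved at $50/hour vs. API and engineering spend
benefit = 400 * 50  # $20,000 of time savings
roi = llm_roi(benefit, api_cost=3_200, engineering_cost=8_000)
print(f"ROI: {roi:.0%}")
```

Including engineering and maintenance time alongside API spend matters: API fees are often the smaller share of the total cost.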

Organizations should focus on token optimization strategies as this directly impacts costs. With techniques like chain-of-thought prompting and in-context learning increasing prompt sizes, efficient token usage becomes increasingly important for controlling expenses.

Key performance metrics

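Several technical metrics are critical when evaluating LLM API performance: latency (including time to first token for streamed responses), token consumption per request, error and rate-limit rates, throughput, and cost per request.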

These metrics provide a comprehensive view of both the technical performance and financial efficiency of your LLM implementation.
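These metrics can be computed directly from per-request logs. The sketch assumes a hypothetical log record with `latency_ms`, `input_tokens`, `output_tokens`, and `status` fields, and uses a nearest-rank percentile with integer arithmetic to avoid floating-point rounding at the boundaries.

```python
from statistics import mean


def percentile(values, pct):
    """Nearest-rank percentile; pct is an integer from 1 to 100."""
    ordered = sorted(values)
    rank = max(1, (pct * len(ordered) + 99) // 100)  # integer ceiling of pct% of n
    return ordered[rank - 1]


def summarize(logs):
    """Aggregate per-request log records into headline performance metrics."""
    latencies = [r["latency_ms"] for r in logs]
    return {
        "p50_latency_ms": percentile(latencies, 50),
        "p95_latency_ms": percentile(latencies, 95),
        "error_rate": sum(r["status"] != "ok" for r in logs) / len(logs),
        "avg_tokens_per_request": mean(r["input_tokens"] + r["output_tokens"] for r in logs),
    }


# Hypothetical logs: 20 requests with latencies of 100-2000 ms, 2 of them failed
logs = [
    {"latency_ms": 100 * (i + 1), "input_tokens": 500, "output_tokens": 100,
     "status": "ok" if i % 10 else "error"}
    for i in range(20)
]
summary = summarize(logs)
```

Tail latency (p95) usually matters more to users than the average, because a single slow response is what they remember.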

Business impact measurement

Beyond technical metrics, measuring business impact requires tracking:

1. Task completion rates: how effectively users accomplish their goals

2. User satisfaction: feedback scores and engagement metrics

3. Time savings: reduction in time to complete specific workflows

4. Conversion rates: effect on desired user actions like purchases

5. User retention: changes in customer loyalty metrics

Establishing clear service-level objectives for each metric helps align technical performance with business goals.

Optimization case study

A SaaS startup initially spent $14,000 monthly on LLM API costs before implementing optimization strategies. By applying:

- Prompt compression

- Caching for repetitive queries

- Routing simpler requests to smaller models

They reduced monthly costs to $3,200 while maintaining quality.

This 77% cost reduction was achieved through systematic analysis of usage patterns and strategic optimization rather than simply reducing functionality. The improved system delivered comparable performance at significantly lower cost, demonstrating the value of targeted optimization efforts.
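Routing simpler requests to smaller models, one of the techniques in this case study, can start as a rule-based check. The thresholds, keywords, and model tier names below are hypothetical; teams often replace such rules with a small classifier trained on logged traffic once enough data exists.

```python
SMALL_MODEL = "small-fast-model"        # hypothetical tier names; map these to real model IDs
LARGE_MODEL = "large-reasoning-model"

COMPLEX_HINTS = ("analyze", "compare", "explain why", "step by step", "write code")


def choose_model(prompt: str, max_simple_chars: int = 400) -> str:
    """Send short, routine prompts to the cheaper model and everything else to the larger one."""
    text = prompt.lower()
    if len(prompt) > max_simple_chars or any(hint in text for hint in COMPLEX_HINTS):
        return LARGE_MODEL
    return SMALL_MODEL


routine = choose_model("What are your opening hours?")
complex_request = choose_model("Compare these two contracts and explain why one is riskier.")
```

Logging which tier handled each request, together with quality scores, shows whether the rules send too much traffic to the expensive model or degrade answers from the cheap one.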

Continuous monitoring and regular optimization reviews ensure ongoing ROI improvements as usage patterns and business needs evolve.

With a clear framework for measuring success, let's now develop a practical roadmap for implementing LLM APIs in your product.

Implementation roadmap for product teams

Now that we've covered the technical, strategic, and measurement aspects of LLM APIs, let's outline a practical implementation roadmap to guide your team through the process.

Methodology for defining use cases and technical requirements

Start your LLM implementation by clearly defining specific use cases for your product. Identify where an LLM API can add value for your users, focusing on tasks that benefit from natural language processing capabilities.

Document technical requirements for each use case:

- Input formats

- Expected outputs

- Response time needs

- Error handling strategies

Determine whether your application needs zero-shot, few-shot, or chain-of-thought prompting. For tasks requiring transparent reasoning, consider whether Claude's Extended Thinking Mode would be beneficial.
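The difference between zero-shot and few-shot prompting shows up directly in the message list sent to a chat-style API. The sketch below builds both variants for a hypothetical ticket-classification task; the labels, system prompt, and example tickets are invented for illustration.

```python
def build_messages(ticket, examples=()):
    """Zero-shot when examples is empty; few-shot when labeled (input, label) pairs are supplied."""
    messages = [{
        "role": "system",
        "content": "Classify the support ticket as billing, technical, or other. Reply with the label only.",
    }]
    for example_input, label in examples:
        # Each example is presented as a prior user/assistant exchange
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": label})
    messages.append({"role": "user", "content": ticket})
    return messages


zero_shot = build_messages("I was charged twice this month.")
few_shot = build_messages(
    "I was charged twice this month.",
    examples=[("The app crashes on login.", "technical"),
              ("Can I get an invoice copy?", "billing")],
)
```

Few-shot examples add input tokens to every request, so it is worth measuring whether they actually improve accuracy on your task before adopting them.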

Create a prioritized list of requirements based on user impact and technical feasibility. This groundwork ensures your integration serves actual user needs rather than implementing AI for its own sake.

Technical evaluation framework for selecting providers

Develop a structured framework to evaluate LLM API providers. Compare candidates across four key dimensions:

1. Capabilities

2. Cost structure

3. Reliability

4. Compliance features

For capabilities, benchmark performance on your specific tasks. Test response quality, accuracy, and consistency with representative inputs from your domain. Consider context window size requirements for your application – GPT-4.1 and Gemini 2.5 Pro offer 1 million token windows, while Sonnet 3.7 provides 200,000 tokens.

Examine pricing models in relation to your expected usage patterns:

1. Calculate potential costs across different user growth scenarios

2. Look beyond per-token pricing to consider rate limits and enterprise options

Evaluate uptime guarantees, scalability under load, and geographic availability. Consider documentation quality and SDK support for your development stack.

Development and testing protocols

Implement a proof-of-concept with your selected provider before full integration. Use this stage to refine prompt design and validate performance assumptions in your specific context.

Develop comprehensive test suites for validating LLM outputs:

- Include regression tests to prevent performance drift over time

- Create adversarial test cases to identify potential failure modes

Establish clear version control practices for prompts and model configurations. Treat prompts as critical application code with proper documentation and review processes.
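Treating prompts as code can be as lightweight as a registry that pins each prompt to an explicit version and records a short content hash, so logs and experiment results can be traced back to the exact text and settings used. `PromptVersion` and `PromptRegistry` are a hypothetical minimal design; in practice the templates would live in version-controlled files and go through code review.

```python
import hashlib
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class PromptVersion:
    name: str
    version: str
    template: str
    model: str
    temperature: float

    @property
    def fingerprint(self) -> str:
        """Short hash of the template and settings, suitable for logging with each request."""
        raw = f"{self.template}|{self.model}|{self.temperature}"
        return hashlib.sha256(raw.encode("utf-8")).hexdigest()[:12]

    def render(self, **variables) -> str:
        # substitute() raises KeyError if a template variable is missing
        return Template(self.template).substitute(**variables)


class PromptRegistry:
    def __init__(self):
        self._prompts = {}

    def register(self, prompt: PromptVersion):
        key = (prompt.name, prompt.version)
        if key in self._prompts:
            raise ValueError(f"{key} already registered; bump the version instead of editing in place")
        self._prompts[key] = prompt

    def get(self, name: str, version: str) -> PromptVersion:
        return self._prompts[(name, version)]


registry = PromptRegistry()
registry.register(PromptVersion("summarize", "1.0.0",
                                "Summarize in $n bullet points:\n$text", "gpt-4.1-mini", 0.2))
p = registry.get("summarize", "1.0.0")
prompt_text = p.render(n=3, text="Quarterly revenue grew...")
```

Refusing to overwrite a registered version forces every prompt change to become a new, reviewable version rather than a silent edit.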

Set up A/B testing infrastructure to compare different prompt strategies or providers. Use these experiments to continuously improve response quality and reduce costs.
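A/B tests need each user to see the same variant consistently across sessions. A common approach, sketched below, hashes a stable user ID together with the experiment name into a bucket; the experiment name, variant labels, and 50/50 split are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str,
                   variants=("control", "treatment"), weights=(0.5, 0.5)) -> str:
    """Deterministically map a user to a variant; the same inputs always return the same variant."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    point = int(digest[:8], 16) / 0xFFFFFFFF  # roughly uniform value in [0, 1]
    cumulative = 0.0
    for variant, weight in zip(variants, weights):
        cumulative += weight
        if point <= cumulative:
            return variant
    return variants[-1]  # guards against floating-point rounding in the weights


variant = assign_variant("user-42", "prompt-v2-test")
counts = {"control": 0, "treatment": 0}
for i in range(1000):
    counts[assign_variant(f"user-{i}", "prompt-v2-test")] += 1
```

Including the experiment name in the hash keeps assignments independent across experiments, so the same users do not always land in the treatment group.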

Monitoring architecture and continuous improvement

Design a robust monitoring system to track LLM API performance in production. Capture key metrics including:

- Latency

- Token usage

- Error rates

- Throughput

Implement automated alerts for anomalies in performance or unexpected responses. Create dashboards that visualize trends over time to identify gradual degradation.
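Automated alerts can start as threshold rules over a rolling window of recent requests. The sketch below flags when the error rate or p95 latency crosses a limit; `RollingAlert` is a hypothetical class, and the window size and thresholds are placeholders that should come from your service-level objectives.

```python
from collections import deque


class RollingAlert:
    """Keep the last `window` requests and report which thresholds are currently breached."""

    def __init__(self, window=100, max_error_rate=0.05, max_p95_ms=5000):
        self.records = deque(maxlen=window)  # older requests fall out automatically
        self.max_error_rate = max_error_rate
        self.max_p95_ms = max_p95_ms

    def observe(self, latency_ms: float, ok: bool):
        self.records.append((latency_ms, ok))

    def check(self):
        if not self.records:
            return []
        alerts = []
        error_rate = sum(not ok for _, ok in self.records) / len(self.records)
        if error_rate > self.max_error_rate:
            alerts.append(f"error rate {error_rate:.1%} exceeds {self.max_error_rate:.1%}")
        latencies = sorted(lat for lat, _ in self.records)
        rank = max(1, (95 * len(latencies) + 99) // 100)  # nearest-rank p95
        p95 = latencies[rank - 1]
        if p95 > self.max_p95_ms:
            alerts.append(f"p95 latency {p95:.0f} ms exceeds {self.max_p95_ms} ms")
        return alerts


monitor = RollingAlert(window=10, max_error_rate=0.1, max_p95_ms=1000)
for i in range(10):
    monitor.observe(200, ok=i not in (0, 1))  # 2 failures out of 10 requests
alerts = monitor.check()
```

A rolling window reacts to recent degradation without being diluted by weeks of healthy history; dashboards over longer periods then catch the gradual drift mentioned above.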

Collect user feedback systematically to evaluate real-world effectiveness. Use this data to identify gaps between benchmark performance and actual user satisfaction.

Establish a continuous improvement cycle with regular review of monitoring data. Create a feedback loop where production insights inform prompt refinements and API integration changes.

With this comprehensive roadmap in place, your team is well-positioned to successfully implement LLM APIs in your product strategy.

Conclusion

Successfully integrating LLM APIs into your product strategy requires balancing technical considerations with clear business objectives. The strategic advantages—from accelerated development cycles to enhanced user experiences—make these tools invaluable for forward-thinking product teams, but implementation must be approached methodically.

The technical implementation pathway matters significantly. Establishing robust API gateways, optimizing token usage, implementing effective caching, and maintaining proper security protocols directly impact both performance and cost efficiency. Cross-functional collaboration between product, engineering, security, and compliance teams is essential for successful deployment.

For product managers, this means carefully defining use cases where LLMs truly add value and establishing clear metrics to measure success. Engineering teams should focus on developing robust testing frameworks, monitoring systems, and optimization strategies to maintain performance while controlling costs. Leadership should recognize that effective LLM integration represents not just a technical improvement but a strategic competitive advantage.

As LLM capabilities continue to evolve rapidly, maintaining a structured approach to evaluation, implementation, and continuous improvement will ensure your products remain at the forefront of AI-enhanced experiences. The organizations that master this balance between technological potential and practical implementation will define the next generation of intelligent products.