Tracing is no longer a “nice to have.” If your agent makes three tool calls, hits retrieval, and then responds, you need to know what happened at each step, what it cost, and whether the result met your quality bar.

The harder problem is what comes next. Once you can observe failures, you still need a disciplined way to fix them: reproduce the issue on a dataset, evaluate changes, and release prompt updates without guessing.

Weights & Biases Weave (often called “Weave”) is a strong option for tracing and evaluation, especially for teams already invested in W&B. Adaline covers tracing and evaluation too, but it goes further by treating prompts as deployable artifacts with environments, promotions, and rollback—so debugging connects directly to what is live.

How We Compared Adaline And Weave

- Tracing depth: Multi-step agent visibility, spans, and context.

- Evaluation coverage: Datasets, LLM-as-a-judge, custom scorers, and reporting.

- Production debugging loop: How fast can you turn failures into test cases?

- Release governance: Version control, environments, approvals, and rollback.

- Cross-functional usability: Can product and engineering operate in the same system?

1. Adaline

Adaline's Editor and Playground allow you to engineer prompts and test them with various LLMs.

Adaline is the collaborative platform where teams iterate, evaluate, deploy, and monitor LLM prompts. It is designed to replace fragmented prompt workflows with a single system of record that connects experimentation to production.


What Adaline is Designed For

Evaluation results from testing 40 user queries on a custom LLM-as-Judge rubric.

- Dataset-based testing (CSV/JSON) so evaluations reflect real user inputs.

- Built-in and custom evaluators, including LLM-as-a-judge, regex/keyword checks, and JavaScript/Python logic.

- Tracking operational metrics like latency, token usage, and cost alongside eval outcomes.

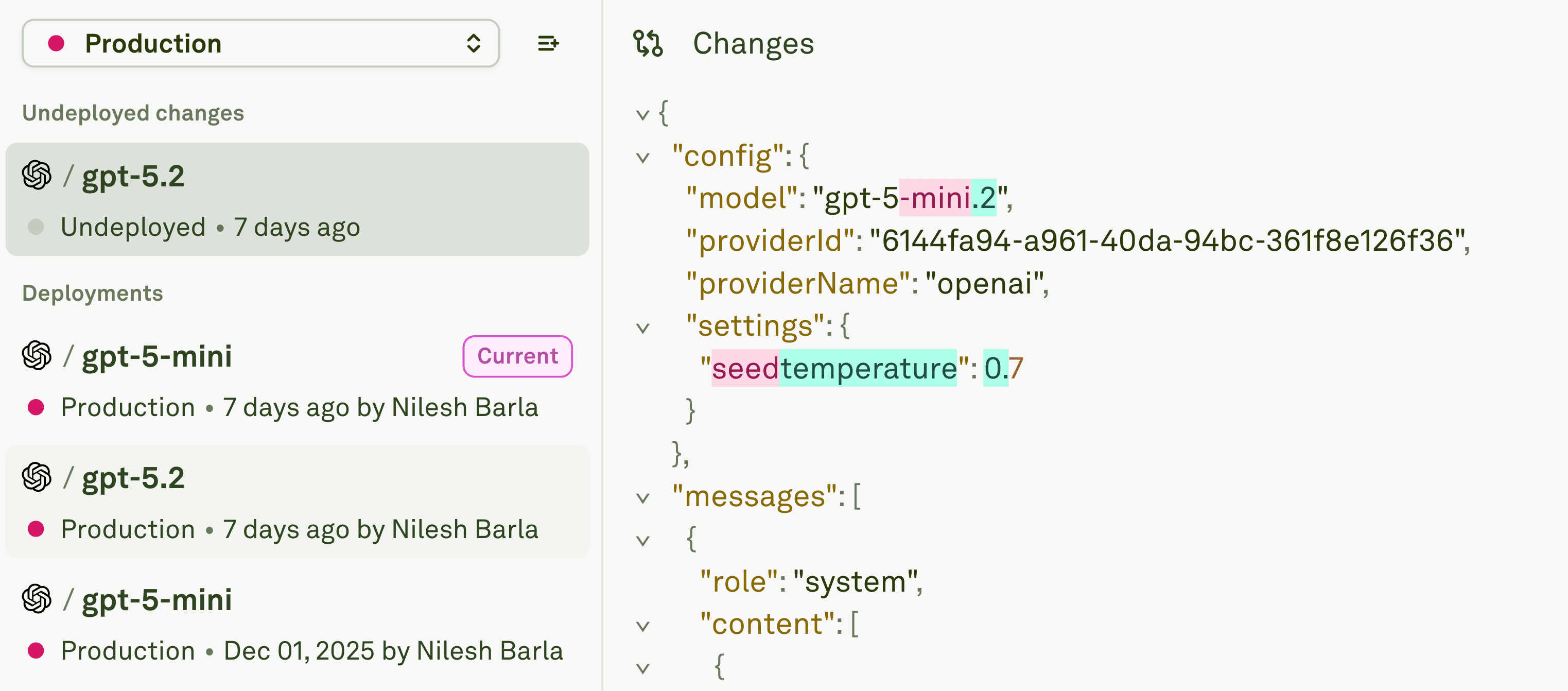

- Prompt release governance: version history, Dev/Staging/Production environments, promotion, and instant rollback.

- Production observability with traces and spans, plus continuous evaluations on live traffic samples.
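To make the evaluator idea concrete, here is a minimal sketch of keyword and regex checks run over a small dataset, with latency and cost summarized next to the pass rate. It is plain Python, not Adaline's evaluator interface: the `Record` fields, the function names, and the sample rows are all assumptions chosen for illustration.

```python
import re
from dataclasses import dataclass
from statistics import mean


@dataclass
class Record:
    """One evaluated response plus the operational metrics logged with it (hypothetical shape)."""
    query: str
    response: str
    latency_ms: float
    cost_usd: float


def keyword_check(response: str, required: list[str]) -> bool:
    """Pass only if every required keyword appears (case-insensitive)."""
    lowered = response.lower()
    return all(word.lower() in lowered for word in required)


def regex_check(response: str, pattern: str) -> bool:
    """Pass if the pattern matches anywhere in the response."""
    return re.search(pattern, response) is not None


def summarize(records: list[Record], required: list[str], pattern: str) -> dict:
    """Report quality (pass rate) next to latency and cost for the same rows."""
    passed = [
        keyword_check(r.response, required) and regex_check(r.response, pattern)
        for r in records
    ]
    return {
        "pass_rate": sum(passed) / len(records),
        "avg_latency_ms": mean(r.latency_ms for r in records),
        "total_cost_usd": round(sum(r.cost_usd for r in records), 6),
    }


# Made-up sample rows; a real run would load them from a CSV or JSON dataset.
records = [
    Record("Where is my order?", "Your order ORD-1234 ships Monday.", 420.0, 0.0012),
    Record("Cancel my order", "I cannot help with that.", 380.0, 0.0009),
]
report = summarize(records, required=["order"], pattern=r"ORD-\d{4}")
print(report)
```

Reporting the three numbers together is the point of the design: a prompt change that lifts the pass rate while doubling cost shows up in the same summary.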

Key Point

Adaline lets users restore, or roll back to, any previously used and tested prompt with a single click.

Adaline’s differentiator is not just “seeing traces.” It is that prompt changes are managed like deployments: you can test in staging, promote to production, and roll back quickly when costs or quality move in the wrong direction.

Best For

Teams that want one workflow for debugging, evaluation, and controlled prompt releases—without stitching together separate tools.

2. W&B Weave

W&B Weave positions itself as an observability and evaluation platform for LLM applications, focused on tracking, debugging, and scoring LLM behavior.

What Weave Is Designed For

- Code-level tracing: instrument function calls (for example, via decorators) and associate the resulting traces with W&B runs.

- Debugging LLM app behavior by inspecting inputs, outputs, and execution traces.

- Evaluation workflows with LLM judges and custom scorers.

- Prompt and model experimentation in a visual Playground, including comparing models and testing against production traces.

- An open-source core and SDK ecosystem (Python/TypeScript).
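Decorator-based tracing is easier to picture with a small example. The sketch below is plain Python and deliberately does not use Weave's SDK, which has its own decorator and initialization calls. The `traced` decorator, the `TRACE_LOG` list, and the two stand-in functions are hypothetical names that only illustrate the pattern.

```python
import functools
import time

# Hypothetical in-memory sink; a real SDK would send these records to a backend.
TRACE_LOG: list[dict] = []


def traced(fn):
    """Record inputs, output, and latency for every successful call to `fn`."""
    @functools.wraps(fn)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        output = fn(*args, **kwargs)
        TRACE_LOG.append({
            "name": fn.__name__,
            "inputs": {"args": args, "kwargs": kwargs},
            "output": output,
            "latency_s": time.perf_counter() - start,
        })
        return output
    return wrapper


@traced
def retrieve(query: str) -> list[str]:
    # Stand-in for a retrieval step.
    return [f"doc about {query}"]


@traced
def answer(query: str) -> str:
    docs = retrieve(query)
    # Stand-in for an LLM call.
    return f"Answer based on {len(docs)} document(s)."


result = answer("refund policy")
print([span["name"] for span in TRACE_LOG])
```

Inner calls finish first, so `retrieve` is logged before `answer`. This sketch skips calls that raise an exception and does not link parent and child spans, both of which a production tracer would need to handle.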

Key Point

Weave is excellent when your main bottleneck is observability and debugging, especially if you already rely on W&B for experiments and tracking. It gives you a tight trace → inspect → evaluate loop inside a familiar ecosystem.

Best For

Engineering teams that want W&B-native tracing and evaluation, plus a prompt playground for rapid experimentation.

Where Adaline Tends To Be a Better “Weave Alternative”

Both products help you trace and evaluate LLM applications. The difference is whether production debugging ends with “we found the issue,” or with “we shipped a governed fix.”

If your team ships prompt updates frequently, you need release controls that make debugging actionable:

- Which prompt version is live right now?

- What changed between the last good run and the current regression?

- Can we promote a tested version safely, and roll back instantly if costs spike?

Adaline’s model is built around those questions: version control for prompts, environment promotion, and rollback as standard operations.
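As a rough illustration of what "prompts as deployable artifacts" means mechanically, the sketch below models immutable prompt versions with a per-environment pointer that can be promoted or rolled back. It is an in-memory toy, not Adaline's API: `PromptRegistry` and its method names are hypothetical, and it only covers the "which version is live" and "promote or roll back" questions.

```python
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """Hypothetical registry: append-only versions plus per-environment pointers."""
    versions: list[str] = field(default_factory=list)
    live: dict[str, int] = field(default_factory=dict)           # env -> live version index
    history: dict[str, list[int]] = field(default_factory=dict)  # env -> previously live versions

    def commit(self, text: str) -> int:
        """Store a new prompt version and return its index."""
        self.versions.append(text)
        return len(self.versions) - 1

    def promote(self, env: str, version: int) -> None:
        """Point an environment at a version, remembering what was live before."""
        if not 0 <= version < len(self.versions):
            raise ValueError(f"unknown version {version}")
        if env in self.live:
            self.history.setdefault(env, []).append(self.live[env])
        self.live[env] = version

    def rollback(self, env: str) -> int:
        """Restore the version that was live before the last promotion."""
        if not self.history.get(env):
            raise RuntimeError(f"nothing to roll back to in {env}")
        self.live[env] = self.history[env].pop()
        return self.live[env]

    def current(self, env: str) -> str:
        """Answer the question: which prompt is live right now?"""
        return self.versions[self.live[env]]


registry = PromptRegistry()
v0 = registry.commit("You are a concise support assistant.")
v1 = registry.commit("You are a concise support assistant. Cite the order ID.")
registry.promote("production", v0)
registry.promote("staging", v1)
registry.promote("production", v1)  # ship the tested version
registry.rollback("production")     # costs spiked: restore the previous version
print(registry.current("production"))
```

Because versions are never edited in place, a rollback is only a pointer move, which is what makes it fast and auditable.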

Feature Comparison At a Glance

A Production Debugging Workflow That Actually Prevents Regressions

A reliable loop has five steps:

1. Capture failures as traces (including context, tool calls, and latency/cost).

2. Convert those failures into a dataset.

3. Define evaluators that reflect your product’s acceptance criteria.

4. Run evaluation against the candidate fix.

5. Release the fix with guardrails: staged promotion and fast rollback.

Weave supports strong versions of steps 1–4: tracing, inspection, playground-based iteration, and evaluations with judges and scorers.

Deploy a prompt from Adaline to any environment.

Adaline covers those steps, but it also hardens step 5 by treating prompts as deployable assets with environments and rollback, so the “fix” is traceable to what is actually live.
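The five-step loop can be sketched end to end in a few lines. Everything here is an assumption made for illustration: the trace dictionaries, the `mentions_order_id` rule, the stand-in `candidate` function (a real run would call an LLM), and the 0.9 threshold. Neither product's API is used.

```python
from typing import Callable

# Step 1 (assumed already done): traces captured from production, with a failure flag.
traces = [
    {"input": "Where is order 1234?", "output": "I don't know.", "failed": True},
    {"input": "What is your refund window?", "output": "30 days.", "failed": False},
    {"input": "Where is order 9876?", "output": "Please contact support.", "failed": True},
]

# Step 2: failing traces become regression test cases.
dataset = [t["input"] for t in traces if t["failed"]]


# Step 3: an evaluator encoding one acceptance criterion (hypothetical rule).
def mentions_order_id(query: str, response: str) -> bool:
    order_id = "".join(ch for ch in query if ch.isdigit())
    return order_id in response


# Stand-in for the candidate prompt plus model.
def candidate(query: str) -> str:
    order_id = "".join(ch for ch in query if ch.isdigit())
    return f"Order {order_id} is in transit."


# Step 4: evaluate the candidate on the regression dataset.
def pass_rate(system: Callable[[str], str], cases: list[str]) -> float:
    results = [mentions_order_id(q, system(q)) for q in cases]
    return sum(results) / len(results)


# Step 5: gate the release on a threshold instead of on intuition.
THRESHOLD = 0.9  # assumed value; set it from your own quality bar
score = pass_rate(candidate, dataset)
decision = "promote to staging" if score >= THRESHOLD else "hold"
print(score, decision)
```

The dataset built in step 2 is the durable asset: every future prompt change runs against it, so a failure that was fixed once cannot return unnoticed.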

Conclusion

If your primary problem is “we cannot see what the model is doing,” Weave is a serious contender, particularly for teams already standardized on W&B.

If your primary problem is “we can see failures, but we still ship prompt changes unsafely,” Adaline is the better alternative. It connects tracing and evaluation to a governed prompt release process: test, promote, and roll back with confidence.

Frequently Asked Questions (FAQs)

Is W&B Weave an observability tool or an evaluation tool?

It is both: Weave markets itself as an LLM observability platform for debugging, and it also supports evaluation using LLM judges and custom scorers.

Does Adaline support multi-step agents and multi-turn conversations?

Yes. Adaline records traces across multi-step agent workflows and can evaluate either the final output or intermediate steps.

What is the biggest difference between Adaline and Weave?

Adaline emphasizes PromptOps: version control, environments, promotion, and rollback for prompts, so evaluation results map directly to what is live.

Can I use Weave for prompt experimentation?

Yes. Weave offers a Playground designed for experimenting with prompts and models, including comparisons and testing against production traces.

When should I choose Adaline over Weave?

Choose Adaline when prompt releases are frequent or high-stakes, and you need controlled deployments, auditability, and rollback as defaults—not as a separate process.