Prompt chaining transforms how we interact with Large Language Models by breaking complex tasks into sequences of smaller, interconnected prompts. Instead of overwhelming an LLM with a single complex instruction, this technique creates a structured workflow where each prompt’s output becomes input for the next. The result? Significantly improved accuracy, reliability, and contextual understanding from your AI systems.

The technique addresses fundamental LLM limitations including context windows, hallucinations, and complex reasoning challenges. By implementing modular prompt structures - whether linear, branching, or dynamic - you gain precise control over each step while maintaining crucial context throughout the interaction process. This structured approach dramatically improves troubleshooting capabilities and ensures higher quality outputs.

For product teams building AI-powered applications, prompt chaining offers concrete benefits: enhanced output quality, simplified debugging, better maintainability, and more predictable performance. These advantages translate directly to more reliable products, faster iteration cycles, and improved user experiences.

1. Core principles and technical architecture

2. Implementation patterns: linear, branching, and dynamic chains

3. Comparison with Chain of Thought and other techniques

4. Task decomposition and error handling strategies

5. Framework evaluations and production optimization

6. Integration with RAG for enhanced factual accuracy

1. What is prompt chaining

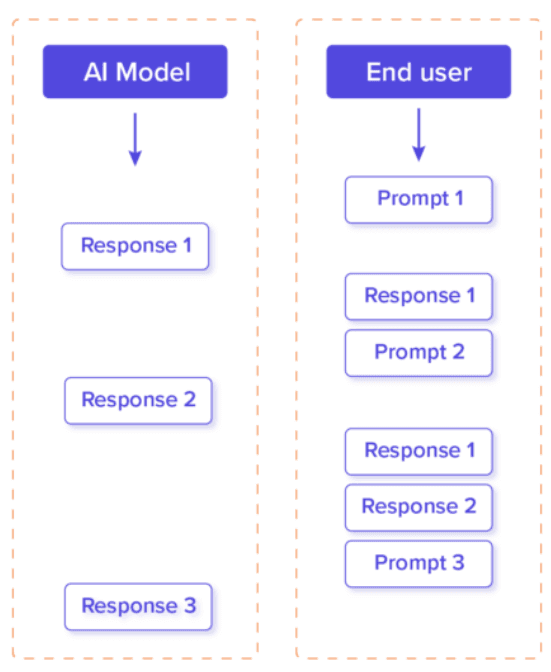

Prompt chaining is a technique that breaks complex tasks into sequences of smaller, interconnected prompts. Each prompt in the chain produces an output that becomes input for the next prompt. This methodical approach enhances LLM performance and reliability.

Illustration of how prompt chaining works | Source: Prompt Chaining Introduction and Coding Tutorials

1.1. Understanding context-maintenance

Language models need proper context to generate meaningful responses. Human conversations become confusing without context. LLMs face similar challenges. Prompt chaining preserves context throughout interactions. It connects smaller, focused prompts in sequence. This structure keeps the LLM aligned with the intended direction.

1.2. Breaking down complex tasks

Rather than overwhelming an LLM with a single complex prompt, prompt chaining divides the task into manageable segments. Each response from one prompt becomes valuable input for the next, creating a logical progression toward the desired outcome. This sequential approach significantly improves the accuracy and relevance of the LLM's outputs.

1.3. Managing context length limitations

Every LLM has built-in restrictions on input length. When dealing with sophisticated scenarios, fitting all instructions into a single prompt becomes impractical. Prompt chaining offers a solution by distributing instructions across multiple connected prompts while maintaining contextual continuity.

1.4. Preventing context hallucinations

Complex problems often risk producing inaccurate or inconsistent responses, particularly when outputs build upon previous responses. Prompt chaining helps reduce these "hallucinations" by keeping each prompt narrowly scoped and controlling exactly which context is carried into the next step.

1.5. Simplified troubleshooting

When issues arise, prompt chaining makes problem identification and resolution more straightforward. By segmenting the interaction into distinct steps, developers can quickly isolate and fix problematic prompts. This modular approach significantly reduces debugging time and improves overall system maintenance.

These foundational principles establish why prompt chaining has become an essential technique for developers working with advanced language models, providing both practical benefits and structural advantages over single-prompt approaches.

2. The technical architecture of prompt chains

Now that we understand the core principles, let's examine how prompt chains are structured from a technical perspective and why this architecture delivers significant advantages.

2.1. Breaking down complex tasks

Prompt chaining is a methodology that decomposes complex tasks into sequential prompt components. This technique improves reliability by converting overwhelming requests into manageable subtasks. Each subtask becomes a separate prompt. The output from one prompt serves as input to the next. This creates a chain of operations that methodically addresses the original complex task.

The structured approach allows Large Language Models (LLMs) to maintain focus. They can handle one cognitive challenge at a time. This prevents context overload that often occurs with detailed single prompts.

2.2. Modular design patterns

The implementation framework for prompt chains follows modular design principles. Each component in the chain has distinct responsibilities with clear inputs and outputs. This modularity enables developers to replace or refine individual components without disrupting the entire chain.

Common architectural patterns include:

- Linear chains: Sequential processing where each prompt directly feeds into the next

- Branching chains: Conditional pathways based on specific outputs

- Recursive chains: Iterative refinement loops that continue until conditions are met

- Parallel chains: Independent processes that execute simultaneously

This modular architecture enhances troubleshooting. Developers can isolate errors to specific chain segments rather than debugging entire prompt sequences.

2.3. Token optimization strategies

Token usage optimization is a critical consideration in prompt chain design. Each prompt in the chain consumes tokens, potentially increasing operational costs. Effective prompt chains balance comprehensiveness with efficiency.

Key optimization techniques include:

- Minimizing context repetition between chain segments

- Using precise instructions that require fewer tokens

- Implementing selective context retention

- Employing specialized models for different chain segments

The impact on operational costs can be significant. While prompt chains may increase the total token count, they often improve output quality and reduce the need for additional refinement requests. This results in more cost-effective interactions over complete workflows.

Through careful architectural design, prompt chains transform complex reasoning tasks into structured processes that enhance LLM performance while maintaining operational efficiency.

3. Linear prompt chain implementation

With the technical architecture of prompt chains now established, we can dive into the most straightforward implementation pattern: the linear prompt chain. This approach provides an excellent starting point for understanding how to structure and execute prompt chains effectively.

3.1. Breaking down complex tasks

Linear prompt chaining involves executing a sequence of focused prompts where each output serves as input for the next step. This approach divides complex tasks into manageable components. When implementing a linear chain, each prompt handles one specific function rather than attempting to process everything simultaneously.

The sequential workflow typically follows a logical progression. The first prompt might extract information or create an outline. Subsequent prompts build upon previous outputs, refining and transforming the content at each stage.

3.2. Python implementation example

Here's a basic linear prompt chain implementation in Python:
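The listing below is a minimal sketch rather than production code. It assumes a hypothetical `call_llm` helper, stubbed here with canned responses so the example runs offline; in practice you would replace it with a call to your model provider's client.

```python
from typing import Callable

# Hypothetical stand-in for a real model client (an assumption, not a real API).
# It returns canned text so the example runs without network access.
def call_llm(prompt: str) -> str:
    if prompt.startswith("Create an outline"):
        return "1. Introduction\n2. Key benefits\n3. Conclusion"
    return f"[draft based on]\n{prompt}"

def linear_chain(topic: str, llm: Callable[[str], str] = call_llm) -> str:
    # Step 1: ask for an outline of the topic.
    outline = llm(f"Create an outline for an article about {topic}.")
    # Step 2: pass the outline into the next prompt to expand it.
    article = llm(f"Write a complete article following this outline:\n{outline}")
    return article

result = linear_chain("prompt chaining")
print(result)
```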

This implementation creates a two-step chain. First, it generates an outline. Then it expands the outline into complete content.

3.3. Performance benefits and use cases

Linear prompt chains excel in structured tasks requiring progressive refinement. By focusing each model interaction on a specific subtask, they deliver several advantages:

1. Improved accuracy through focused processing

2. Better context retention across multiple steps

3. Enhanced explainability with traceable transformations

4. Greater control over the final output quality

Content creation workflows benefit significantly from this approach. A chain might generate an outline, draft sections, refine language, and finally optimize for SEO. Report generation similarly benefits from structured, step-by-step processing.

Your implementation strategy should match the complexity of your task. Simple workflows may only need two or three steps, while comprehensive processes might require longer chains with validation checks between steps.

Now that we understand the basics of linear chains, let's explore more complex implementation patterns that can handle more sophisticated reasoning tasks.

4. Branching chains: Decision-tree architectures

While linear chains follow a straightforward sequence, many real-world scenarios require more complex decision-making capabilities. Branching chains address this need by implementing conditional logic that adapts to different circumstances and outputs.

Branching chains introduce conditional logic into prompt chain workflows. In this architecture, the path through prompts depends on previous response outputs, creating a tree-like structure with multiple possible routes.

4.1. Applications in complex decision processes

Branching chains excel in scenarios with varying decision paths. Customer service automation benefits from this approach. The AI navigates different troubleshooting routes based on identified issues. The system adjusts dynamically as it processes customer inputs.

Scenario-based problem-solving leverages branching chains effectively. Each branch explores different aspects of the original query. This generates outputs tailored to specific situations. The method works well when inputs have multiple interpretations.

4.2. Implementation with modern frameworks

Popular tooling like LangChain provides robust support for branching chain implementations. These frameworks help manage the complex logic and transitions between different prompt pathways.

The implementation typically involves:

1. A primary prompt that analyzes the initial input

2. Decision nodes that evaluate conditions

3. Branch selection based on predetermined criteria

4. Separate prompt sequences for each branch

Branching architectures require clear "if-then" logic definitions to shape future outputs correctly.
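The four parts listed above can be sketched in plain Python without any framework. This is an illustrative sketch only: `classify` stands in for the primary prompt (a real chain would ask a model to label the input), and the branch names and handlers are hypothetical.

```python
from typing import Callable, Dict, Tuple

def classify(message: str) -> str:
    """Stub for the primary prompt that analyzes the input."""
    text = message.lower()
    if "refund" in text or "charge" in text:
        return "billing"
    if "error" in text or "crash" in text:
        return "technical"
    return "general"

# Each branch would normally run its own prompt sequence; here each one
# just builds the follow-up prompt it would send.
def billing_branch(message: str) -> str:
    return f"Resolve this billing issue step by step: {message}"

def technical_branch(message: str) -> str:
    return f"Walk through troubleshooting for: {message}"

def general_branch(message: str) -> str:
    return f"Answer this general question: {message}"

BRANCHES: Dict[str, Callable[[str], str]] = {
    "billing": billing_branch,
    "technical": technical_branch,
    "general": general_branch,
}

def branching_chain(message: str) -> Tuple[str, str]:
    label = classify(message)                       # decision node
    handler = BRANCHES.get(label, general_branch)   # explicit if-then routing
    return label, handler(message)

label, follow_up = branching_chain("The app shows an error on login")
print(label, "->", follow_up)
```

Keeping the routing table explicit makes each decision point easy to test in isolation.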

4.3. Performance benefits and considerations

Branching chains are well suited to inputs that call for different handling. They enable more nuanced decision-making by routing each input down the path that best fits it, rather than forcing every case through the same sequence.

The design helps models maintain context across different branches while improving the handling of ambiguous queries. Each branch can focus on specific aspects of a problem, resulting in more targeted outputs.

However, careful monitoring is necessary. The additional complexity of branching architectures can increase the risk of errors propagating through the system. Testing should verify that decision points correctly route queries to appropriate branches.

For diagnostic systems especially, branching chains can outperform linear chains because they adapt to new information as it's discovered.

Building upon these concepts, we can now explore even more sophisticated chain structures that adapt and evolve during execution.

5. Dynamic and self-modifying chain structures

Taking prompt chain architectures to their most advanced form, dynamic and self-modifying chains represent the cutting edge of adaptive AI systems. These sophisticated structures go beyond preset pathways to create truly responsive workflows.

5.1. Technical architecture for dynamic prompt chains

Dynamic prompt chains offer flexible solutions to complex problems. They adjust automatically based on previous outputs. Unlike static chains, they evolve during execution. They adapt to changing requirements in real-time.

These chains use feedback mechanisms to modify their structure. The system evaluates outputs at each step. It then determines the next optimal prompt. This creates responsive workflows that handle unpredictable scenarios.

The architecture needs careful planning of decision points. The chain might branch into different paths at these points. Each potential path must maintain context. It must also address specific task variations.

5.2. Implementation methods for adaptive workflows

Implementing dynamic chains relies on robust feedback loops. These analyze the quality and relevance of each output before selecting the next prompt in the sequence.

Developers can create adaptive workflows using several approaches:

- Output classification systems that categorize results and trigger appropriate follow-up prompts

- Confidence scoring mechanisms that measure response quality and adjust accordingly

- Error detection algorithms that identify problems and activate recovery paths

- Context retention systems that maintain information across dynamic shifts

Self-modifying chains go further by allowing the system to rewrite its own prompts during execution. This creates truly autonomous workflows that can optimize themselves over time.
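As a rough illustration of a confidence-scoring feedback loop (one of the approaches listed above, not a full self-modifying chain), the sketch below picks the next prompt based on a score of the previous output. The `stub_llm` and `stub_score` functions, the 0.8 threshold, and the step limit are all assumptions made for the example.

```python
from typing import Callable, List, Tuple

def stub_llm(prompt: str) -> str:
    # Hypothetical model: gives a better answer only when asked for more detail.
    return "Detailed answer." if "more detail" in prompt else "Short answer."

def stub_score(output: str) -> float:
    # Hypothetical quality score; a real system might use a grader prompt.
    return 0.9 if output.startswith("Detailed") else 0.4

def dynamic_chain(
    task: str,
    llm: Callable[[str], str] = stub_llm,
    score: Callable[[str], float] = stub_score,
    threshold: float = 0.8,
    max_steps: int = 3,
) -> Tuple[str, List[float]]:
    prompt = f"Answer the question: {task}"
    trace: List[float] = []
    output = ""
    for _ in range(max_steps):
        output = llm(prompt)
        confidence = score(output)
        trace.append(confidence)
        if confidence >= threshold:
            break  # output is good enough, stop the chain
        # Feedback decides the next prompt: low confidence triggers refinement.
        prompt = f"Improve this answer with more detail: {output}"
    return output, trace

answer, trace = dynamic_chain("Why chain prompts?")
print(answer, trace)
```

The `max_steps` cap matters: without it, a chain that never reaches the threshold would loop indefinitely.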

5.3. Parallel processing techniques

When chains grow complex, latency becomes a critical concern. Parallel processing addresses this by running independent segments simultaneously rather than sequentially.

One effective approach identifies chain segments with no interdependencies. These can execute in parallel, with results merged later at synchronization points. This significantly reduces overall completion time.

Parallel chains require careful state management to prevent conflicts when results are combined. Well-designed synchronization mechanisms ensure data consistency while maintaining the speed advantages of parallel execution.

The most sophisticated implementations use dynamic resource allocation, directing computing power to different chain segments based on complexity and priority.
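The independent-segment approach can be sketched with Python's standard `concurrent.futures` module. The three step functions are hypothetical stubs standing in for separate model calls that do not depend on each other.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical independent steps; each would be its own model call in practice.
def summarize(text: str) -> str:
    return f"summary({text})"

def extract_keywords(text: str) -> str:
    return f"keywords({text})"

def detect_sentiment(text: str) -> str:
    return f"sentiment({text})"

def parallel_chain(text: str) -> str:
    steps = {
        "summary": summarize,
        "keywords": extract_keywords,
        "sentiment": detect_sentiment,
    }
    with ThreadPoolExecutor(max_workers=len(steps)) as pool:
        futures = {name: pool.submit(fn, text) for name, fn in steps.items()}
        # Synchronization point: wait for every branch before merging.
        results = {name: future.result() for name, future in futures.items()}
    # Merge in a fixed order so the combined prompt is deterministic.
    merged = "; ".join(f"{name}={value}" for name, value in sorted(results.items()))
    return f"Combine these findings: {merged}"

print(parallel_chain("doc"))
```

Threads are a reasonable fit here because model calls are typically I/O-bound; each branch returns its own result, so nothing shared is mutated before the merge.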

6. Chain of thought vs. prompt chaining: Technical comparison

Both Chain of Thought and prompt chaining aim to improve LLM capabilities, but they take fundamentally different approaches. Understanding the technical distinctions between these techniques will help you select the optimal strategy for different scenarios.

6.1. Architectural differences

Chain of Thought (CoT) and prompt chaining represent two distinct approaches to enhancing LLM capabilities. CoT incorporates reasoning steps within a single prompt, guiding the model through logical thinking processes to reach conclusions. Prompt chaining, conversely, breaks tasks into sequential prompts where each output feeds into the next prompt as input.

CoT focuses on solving complex problems via detailed reasoning in one prompt. It excels in tasks requiring logical clarity like math problems and multi-step analysis. Prompt chaining refines tasks through multiple, interconnected prompts. This approach works well for content creation, debugging, and iterative learning.

6.2. Performance trade-offs

The flexibility between these techniques varies significantly. Prompt chaining offers high adaptability with independently adjustable steps. If one part isn't perfect, you can easily modify it without reworking the entire process. CoT has more limited flexibility since errors require re-evaluation of the entire reasoning chain.

Computational costs differ too. CoT concentrates its cost in a single call whose prompt and reasoning output can be long. Prompt chaining uses simpler prompts executed sequentially, but it requires multiple API calls, so total token usage and latency can end up higher.

6.3. Implementation considerations

Error handling represents another key distinction. With prompt chaining, errors are easier to correct at each prompt stage. The modular design allows for focused troubleshooting. CoT requires reworking the entire prompt when reasoning errors occur.

In practice, a prompt chain is only as good as its individual prompts, while CoT leaves more of the reasoning to the model within a single response. For complex tasks, combining both methods often yields the best results. Structure a task with prompt chaining, then apply CoT for detailed reasoning within specific steps.

6.4. When to choose each approach

Select prompt chaining for iterative tasks requiring multiple drafts or component-based problems needing refinement. Choose CoT for complex reasoning challenges and multi-step problem solving that benefits from transparent logic.

Understanding when to apply each technique allows you to achieve more accurate, organized outcomes with prompt engineering.

6.5. Comparative case study

A 2023 industry benchmark tested both approaches on complex reasoning tasks. Prompt chaining achieved 87% accuracy on multi-step logic problems. Chain of Thought scored 79% on identical problems. The prompt chain required more API calls but showed 30% faster development time. Debugging efforts decreased by 45% with prompt chains versus CoT. This practical comparison reinforces when each approach delivers optimal results.

With a clear understanding of the differences between these techniques, let's next explore how to effectively break down complex tasks when designing prompt chains.

7. Task decomposition strategies for effective chain design

Creating successful prompt chains begins with properly breaking down complex tasks into manageable components. This process of task decomposition is critical for designing chains that process information efficiently and produce high-quality outputs.

7.1. Breaking complex tasks into meaningful parts

Task decomposition is essential for prompt chain success. Identifying optimal segmentation points ensures each step focuses on a specific function. Proper decomposition reduces cognitive load on the model while improving overall performance.

Start by analyzing your task to determine logical break points. Consider splitting at points where the nature of processing changes, for example from extraction to summarization, or from analysis to refinement.

Look for natural transitions in your workflow where output from one step becomes meaningful input for the next.

7.2. Context handoff patterns

Effective context handoff between chain components is crucial for maintaining coherence. When designing handoffs, focus on passing only essential information needed for the next step.

Keep handoffs clean by structuring data consistently throughout the chain. This might involve:

- Using standardized formats like JSON for structured data transfers

- Explicitly stating what information should be carried forward

- Trimming redundant information that won't be used downstream

A short, single-sentence summary of the previous step can also serve as the transition between chain components, connecting each step logically.
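A handoff along these lines might look like the sketch below, which uses the standard `json` module. The field names and the `build_handoff` helper are hypothetical, chosen only to show trimming and a consistent format.

```python
import json
from typing import Dict, List

def build_handoff(step_output: Dict[str, str], carry_forward: List[str]) -> str:
    # Keep only the fields the next step needs, in a consistent JSON format.
    trimmed = {key: step_output[key] for key in carry_forward if key in step_output}
    return json.dumps(trimmed, sort_keys=True)

# Hypothetical output of an extraction step.
extraction = {
    "customer": "Acme",
    "issue": "login failure",
    "raw_transcript": "a very long transcript that later steps do not need",
    "debug_notes": "internal only",
}

# Explicitly state what is carried forward; everything else is dropped.
handoff = build_handoff(extraction, ["customer", "issue"])
next_prompt = f"Draft a reply using this JSON context:\n{handoff}"
print(next_prompt)
```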

7.3. Validation checkpoints for error reduction

Implementing validation checkpoints between chain steps significantly reduces error rates. These checkpoints verify that each step's output meets quality standards before proceeding.

Validation can take several forms:

- Consistency checks to ensure outputs match expected formats

- Relevance verification to confirm outputs address the intended task

- Completeness assessment to ensure no critical information is lost

When errors are detected, fallback mechanisms can be triggered to recover gracefully rather than propagating mistakes through the entire chain.

Incremental validation makes troubleshooting simpler as issues can be isolated to specific chain components instead of requiring end-to-end debugging.
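One way to picture a checkpoint is a small function that inspects a step's output before the chain continues. The sketch below checks format and completeness for a step that is assumed to return a JSON outline; the required keys are a hypothetical schema, not a standard.

```python
import json
from typing import List, Tuple

REQUIRED_KEYS = {"title", "sections"}  # hypothetical schema for an outline step

def validate_outline(raw: str) -> Tuple[bool, List[str]]:
    """Return (ok, problems) for one step's raw output."""
    try:
        data = json.loads(raw)  # consistency check: is it the expected format?
    except json.JSONDecodeError:
        return False, ["output is not valid JSON"]
    if not isinstance(data, dict):
        return False, ["output is not a JSON object"]
    problems: List[str] = []
    missing = REQUIRED_KEYS - data.keys()  # completeness check
    if missing:
        problems.append(f"missing keys: {sorted(missing)}")
    if not data.get("sections"):
        problems.append("no sections produced")
    return (not problems), problems

ok, problems = validate_outline('{"title": "Prompt chaining", "sections": ["Intro"]}')
print(ok, problems)
```

Returning the list of problems, not just a boolean, gives the fallback step something concrete to act on.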

Effective task decomposition lays the foundation for robust prompt chains, but equally important is implementing proper error handling throughout the system.

8. Error handling and validation in prompt chains

Even with careful task decomposition, prompt chains require robust error handling and validation mechanisms to maintain reliability. These mechanisms help prevent cascading failures and keep outputs consistent and accurate across the entire workflow.

8.1. Building validation layers

Implementing validation layers at each step of a prompt chain significantly improves error detection. These layers verify that the output from each prompt meets expected criteria before proceeding to the next step. This focused approach makes troubleshooting more efficient.

Strong validation checks can detect issues like:

- Missing information in prompt outputs

- Inconsistent formatting or structure

- Context loss between chain steps

- Factual inaccuracies or hallucinations

8.2. Preventing cascading failures

When errors occur in early stages of a prompt chain, they can propagate throughout the entire workflow. A well-designed error handling architecture includes:

- Incremental validation checks after each step

- Clear error messages that identify specific failure points

- Fallback prompts that activate when primary prompts fail

- Automatic retry logic for recoverable errors

This structured approach contains problems at their source rather than allowing them to compound through the chain.
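The retry and fallback items above could be combined in a small wrapper like the following sketch. The step functions, the validation rule, and the retry count are illustrative assumptions.

```python
from typing import Callable, List, Tuple

def run_step(
    primary: Callable[[str], str],
    fallback: Callable[[str], str],
    validate: Callable[[str], bool],
    prompt: str,
    max_retries: int = 2,
) -> Tuple[str, List[str]]:
    errors: List[str] = []
    for attempt in range(1, max_retries + 1):
        try:
            output = primary(prompt)
        except Exception as exc:  # treated as recoverable for this sketch
            errors.append(f"attempt {attempt}: {exc}")
            continue
        if validate(output):
            return output, errors
        errors.append(f"attempt {attempt}: validation failed")
    # Primary prompt kept failing: activate the fallback prompt.
    return fallback(prompt), errors

# Hypothetical flaky step: fails once, then succeeds.
calls = {"n": 0}

def flaky_primary(prompt: str) -> str:
    calls["n"] += 1
    if calls["n"] == 1:
        raise TimeoutError("model timed out")
    return "VALID: summary"

def safe_fallback(prompt: str) -> str:
    return "VALID: generic response"

def is_valid(text: str) -> bool:
    return text.startswith("VALID")

output, errors = run_step(flaky_primary, safe_fallback, is_valid, "Summarize the ticket")
print(output, errors)
```

The collected error messages identify which attempt failed and why, which is what makes the failure point easy to locate later.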

8.3. Optimizing for robustness

Performance optimization is essential for maintaining prompt chain reliability over time. Chains should be designed with:

- Input validation that ensures data quality before processing

- Context retention mechanisms to prevent information loss between steps

- Conditional logic that handles edge cases and exceptions

- Parallel processing for independent subtasks to improve efficiency

A single-sentence validation prompt after each major step can dramatically improve overall chain reliability.

8.4. Error mitigation strategies

The most effective prompt chains incorporate multiple error mitigation techniques. These include testing alternative prompt formulations when errors occur, implementing automatic error correction, and maintaining a comprehensive log of failures for continuous improvement.

With proper error handling and validation, prompt chains become significantly more robust and reliable, making them suitable for production environments where consistent performance is essential.

Beyond validation, grounding chain steps in retrieved information is another way to improve reliability.

9. RAG integration with prompt chains

Combining prompt chains with Retrieval Augmented Generation (RAG) creates powerful systems that leverage both structured reasoning and factual information retrieval. This integration represents an important advancement in building AI systems that are both intelligent and factually accurate.

9.1. Enhancing LLM performance with combined techniques

Retrieval Augmented Generation (RAG) and prompt chaining work powerfully together to improve LLM reliability. This integration connects document retrieval capabilities with structured prompt sequences. By incorporating retrieved information at specific points in a prompt chain, systems deliver more accurate, contextually aware responses.

9.2. Implementation methodology

A typical RAG-enhanced prompt chain follows a multi-step process:

1. The initial prompt triggers information retrieval from external knowledge sources

2. Retrieved content is incorporated into subsequent prompts in the chain

3. Each step builds upon previous context while maintaining factual grounding

This architecture is particularly valuable for knowledge-intensive applications where hallucination risks are high. Breaking complex tasks into smaller, knowledge-grounded steps ensures information stays relevant throughout the chain.
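The three steps can be sketched with a toy in-memory retriever. Everything here is an assumption for illustration: the `DOCS` contents are made up, `retrieve` is a naive keyword match standing in for a vector store or search index, and `echo_llm` is a stub model.

```python
from typing import Callable, Dict, List

# Made-up knowledge base for the example.
DOCS: Dict[str, str] = {
    "returns": "Items can be returned within 30 days with a receipt.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
}

def retrieve(query: str, docs: Dict[str, str] = DOCS) -> List[str]:
    # Toy keyword retriever; a real system would use embeddings or search.
    words = set(query.lower().replace("?", "").split())
    return [text for key, text in docs.items() if key in words]

def echo_llm(prompt: str) -> str:
    # Stub model that echoes its prompt so the data flow is visible.
    return f"[model output for]\n{prompt}"

def rag_chain(question: str, llm: Callable[[str], str] = echo_llm) -> str:
    # Step 1: retrieve passages relevant to the question.
    passages = retrieve(question)
    context = "\n".join(passages) or "No relevant passages found."
    # Step 2: draft an answer grounded in the retrieved context.
    draft = llm(f"Answer using only this context:\n{context}\n\nQuestion: {question}")
    # Step 3: re-check the draft against the same context.
    return llm(
        "Check the draft against the context and fix unsupported claims.\n"
        f"Context:\n{context}\nDraft:\n{draft}"
    )

answer = rag_chain("What is your policy on returns?")
print(answer)
```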

9.3. Performance benefits

RAG integration with prompt chains significantly reduces hallucinations. LLMs access external knowledge at each step. This reduces reliance on potentially inaccurate internal knowledge.

Key benefits include:

- Improved context-maintenance across complex reasoning tasks

- Enhanced factual accuracy in multi-step generations

- Better transparency with citeable information sources

- Reduced error propagation between chain steps

Organizations report more reliable responses with this approach. This is especially true for domain-specific queries. These queries require both fact retrieval and complex reasoning.

10. Performance optimization for production deployments

Moving prompt chains from experimental to production environments requires careful optimization to manage costs, latency, and resource utilization effectively. These practical considerations are essential for scaling prompt chain implementations.

10.1. Latency optimization techniques

Prompt chaining architectures present unique challenges for production environments. Breaking down complex tasks into smaller, focused steps improves accuracy but may increase overall response time. To minimize latency:

- Structure prompt chains with parallel execution where possible

- Utilize smaller, faster models for intermediate chain steps that don't require advanced reasoning

- Implement caching mechanisms for common intermediate outputs

Caching in particular can cut response times substantially in high-volume applications where many requests share the same intermediate outputs.
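Caching an intermediate step can be as simple as wrapping it with `functools.lru_cache` from the standard library, as in this sketch. The `cached_classify` step is a hypothetical stub; caching like this only makes sense for steps whose output should be identical for identical input.

```python
from functools import lru_cache

call_count = 0  # counts real (uncached) executions of the step

@lru_cache(maxsize=256)
def cached_classify(text: str) -> str:
    global call_count
    call_count += 1
    # Stub for an intermediate model call.
    return "question" if text.strip().endswith("?") else "statement"

inputs = ["Is it up?", "Is it up?", "It is down."]
labels = [cached_classify(text) for text in inputs]
print(labels, call_count)
```

The second identical input is served from the cache, so the underlying step runs only twice for three requests.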

10.2. Token usage management

Each prompt in a chain consumes tokens, directly impacting operational costs. Effective token management strategies include:

- Designing prompts to be concise yet complete

- Limiting context passing between chain components to essential information

- Allocating tokens deliberately across the chain, prioritizing steps that benefit most from detailed context

These approaches ensure optimal performance without sacrificing quality or increasing expenses unnecessarily.

10.3. Multi-model integration patterns

Not every step in a prompt chain requires the same model capabilities. Strategic integration of different models creates an optimal balance:

- Use lightweight models for simple extraction or classification tasks

- Reserve advanced models for complex reasoning or generation steps

- Implement automatic model selection based on task complexity

This tiered approach reduces costs while maintaining high-quality outputs. It's particularly effective for customer-facing applications where response time directly impacts user experience.
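A simple rule-based selector illustrates the idea. The model names, task categories, and token threshold below are placeholders, not recommendations for any particular provider.

```python
# Placeholder model names; substitute whatever models you actually use.
MODEL_TIERS = {
    "light": "small-fast-model",
    "advanced": "large-reasoning-model",
}

SIMPLE_TASKS = {"extract", "classify"}

def select_model(task_type: str, input_tokens: int, long_input_threshold: int = 2000) -> str:
    # Simple tasks on short inputs go to the lightweight tier;
    # everything else is routed to the advanced tier.
    if task_type in SIMPLE_TASKS and input_tokens < long_input_threshold:
        return MODEL_TIERS["light"]
    return MODEL_TIERS["advanced"]

print(select_model("classify", 300))
print(select_model("generate", 300))
```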

10.4. Performance monitoring

Implement robust monitoring across all chain components to identify bottlenecks. Track:

- Per-step latency metrics

- Token consumption patterns

- Success rates for each chain component

Regular performance audits help maintain optimal operation as usage patterns evolve.
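The three metrics above can be collected with a thin wrapper around each step. This is a sketch under stated assumptions: `ChainMonitor` is a hypothetical helper, and the token count is a rough whitespace estimate rather than real tokenizer or API usage data.

```python
import time
from collections import defaultdict
from typing import Callable

class ChainMonitor:
    def __init__(self) -> None:
        self.stats = defaultdict(
            lambda: {"calls": 0, "failures": 0, "seconds": 0.0, "tokens": 0}
        )

    def run(self, name: str, step: Callable[[str], str], prompt: str) -> str:
        record = self.stats[name]
        record["calls"] += 1
        start = time.perf_counter()
        try:
            output = step(prompt)
        except Exception:
            record["failures"] += 1
            raise
        finally:
            # Per-step latency is recorded whether the step succeeds or fails.
            record["seconds"] += time.perf_counter() - start
        # Rough estimate: whitespace-separated words in prompt and output.
        record["tokens"] += len(prompt.split()) + len(output.split())
        return output

    def success_rate(self, name: str) -> float:
        record = self.stats[name]
        if not record["calls"]:
            return 0.0
        return 1 - record["failures"] / record["calls"]

monitor = ChainMonitor()
monitor.run("outline", lambda p: "one two three", "make an outline")
print(dict(monitor.stats["outline"]), monitor.success_rate("outline"))
```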

While prompt chaining offers significant benefits, it's important to understand its limitations and challenges to implement it effectively.

11. Technical limitations and engineering challenges

Despite its many advantages, prompt chaining is not without challenges. Understanding these limitations is essential for making informed implementation decisions and developing strategies to mitigate potential issues.

11.1. System complexity overhead

Implementing prompt chains increases overall system complexity. This expanded architecture creates more potential failure points for LLMs. Context loss between steps and misaligned outputs become more common risks. The additional complexity requires robust error handling mechanisms to maintain reliability in production environments.

11.2. Error propagation risks

Errors in early prompts can cascade throughout the entire chain. Each prompt in the sequence builds upon previous outputs, potentially amplifying initial mistakes. This creates particular challenges when implementing prompt chains for mission-critical applications. Effective mitigation strategies include implementing validation steps between prompts and building fallback mechanisms for error recovery.

11.3. Performance impact assessment

Prompt chaining introduces significant performance considerations. Each prompt requires its own LLM API call, potentially increasing overall costs. Processing time inevitably increases with multiple sequential calls. Response latency can become a critical issue for time-sensitive applications.

Additionally, the combined output of a prompt chain tends to be longer than the output of a single monolithic prompt. This increases token usage and can further impact both performance and costs. Teams must carefully balance the benefits of improved accuracy against these performance considerations.

11.4. Single point of failure

The first prompt in a chain is particularly critical. A flawed initial prompt creates a cascade of issues throughout the entire system. Thoroughly testing and validating this entry point is essential for building reliable prompt chain implementations.

Human-in-the-loop validation can provide an effective safeguard for complex production systems, allowing for error correction before issues propagate through the chain.

By understanding and planning for these challenges, development teams can leverage prompt chaining's benefits while minimizing its potential drawbacks.

Conclusion

Prompt chaining represents a paradigm shift in how we leverage LLMs for complex tasks. By breaking problems into sequential, manageable steps, we gain significant control over outputs while maintaining essential context throughout the interaction. This structured approach dramatically improves reliability, reducing hallucinations and enabling more precise troubleshooting.

The technical implementation requires careful consideration of chain architecture, validation mechanisms, and performance optimization. Whether using linear chains for straightforward workflows or branching structures for decision-based tasks, the modular design allows for targeted improvements and easier maintenance. Integration with techniques like RAG further enhances factual accuracy.

For product managers, prompt chaining enables clearer product roadmaps with more predictable feature development. AI engineers benefit from improved debugging capabilities and more maintainable systems. For startup leadership, this technique represents a strategic advantage - delivering more reliable AI products with reduced development cycles and lower operational costs.

While prompt chaining adds complexity, the benefits of improved accuracy, reliability, and user experience make it an essential technique in the modern AI toolbox.